Mathematics For Machine Learning: Mathematical Intuition Basics

Mathematics is the core of many sciences, including machine learning and data science. But how exactly does it help us solve such complex problems? What happens behind the scenes that allows us to teach computers to perform tasks that seemed impossible decades ago? The answer lies in the power of mathematics, which provides the tools and frameworks needed to model, analyze, and solve these problems. In this tutorial on mathematics for machine learning, we will build mathematical intuition, exploring how core mathematical concepts drive the algorithms and techniques we use today. We will focus on the fundamentals, as these concepts are often overlooked in machine learning courses, preventing learners from understanding the core principles behind the ML algorithms.

Understanding these concepts not only unlocks (to some extent) the “black box” nature of machine learning models but also empowers us to make better decisions when designing and tuning them. Machine learning problems are often dealt with in n-dimensional space due to the nature of data, unlike the 2D or 3D spaces we commonly imagine. However, the concepts we learn for 2D space can be expanded to n-dimensional space, along with the intuition for what is happening. So, let’s start from scratch.


Data – The Fuel Of Machine Learning Algorithms

Data is the main fuel that helps machine learning algorithms solve complex problems. By representing data such as images, audio, or text in numerical terms, we can perform mathematical operations on them. This is exactly what we do: first, we find a way to represent various data types using numbers, and then we apply mathematical algorithms to learn patterns.

Let’s consider a very simple tabular dataset. It has rows and columns. Each column represents a different feature of the data. Each row corresponds to an individual or a data point with specific values for each feature.

| Age | Height | Weight |
|---|---|---|
| 25 | 180 | 75 |
| 30 | 165 | 60 |
| 22 | 170 | 68 |
| 28 | 160 | 55 |

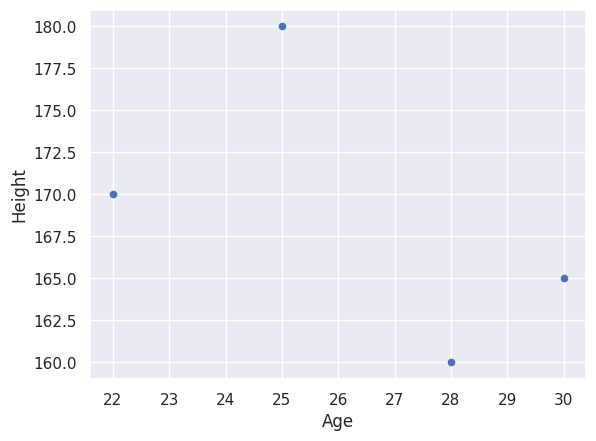

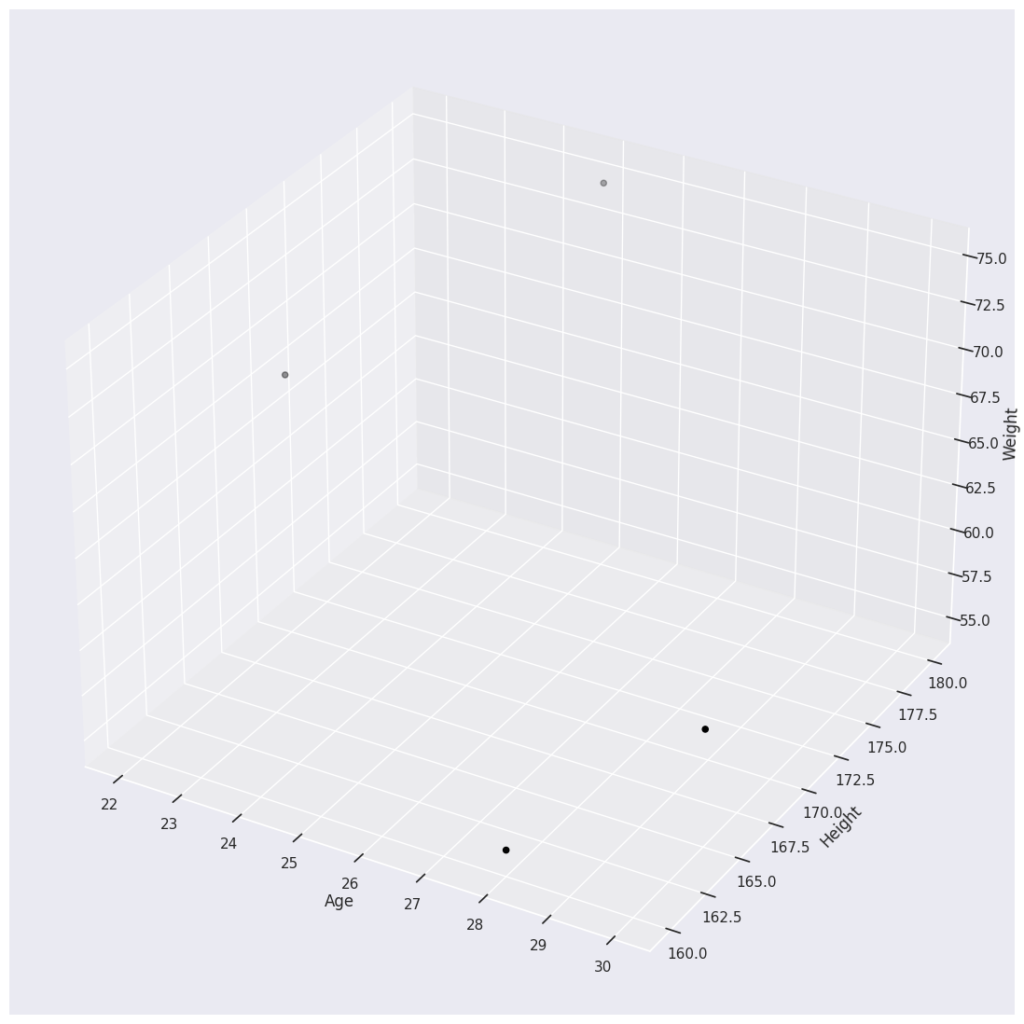

Each row of the table can be considered a single data point because it represents data for an individual person. However, we often perform operations collectively on each column to get an overall picture of the dataset. We can represent each column or row as a vector and perform calculations on them. By plotting these vectors, we can visualize their existence in space.

For example, if you take the “Age” column on the x-axis and the “Height” column on the y-axis, you can visualize the relationship between these two features. Similarly, you can take the “Weight” column on the z-axis and visualize the data points in a 3D space. In practice, however, we often deal with datasets containing many rows and columns, making it impossible to visualize the entire dataset in an n-dimensional space. Therefore, we typically select two or three columns for analysis.

We conceptualize our data as existing in an n-dimensional space (e.g., 3D space in the case of three features), but we also need to perform mathematical calculations on all of it efficiently. This is where matrices come into play. By representing the data in matrix form, with rows as vectors of individual data points and columns as feature vectors, we can perform calculations on the entire dataset efficiently.

The entire dataset can be represented as a matrix, where each row is a vector (data point), and each column is a feature. This structure mirrors how data is often collected and organized in tables or spreadsheets.

    [
      [25, 180, 75],
      [30, 165, 60],
      [22, 170, 68],
      [28, 160, 55]
    ]

Each row, such as [25, 180, 75], is a data point, and each column represents a feature (Age, Height, Weight). Once we have our data represented in matrix form, we can perform mathematical calculations. Operations on matrices can be efficiently performed using vectorization, a method where operations are applied to entire arrays or matrices at once rather than iterating over individual elements. This speeds up computations significantly compared to looping through each element.
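As a rough sketch of the row and column views described above, the snippet below stores the example table as a list of rows and derives the columns by transposing it. It uses plain Python so that it runs anywhere; the variable names are illustrative assumptions. In practice an array library such as NumPy would perform the same column-wise operation in vectorized form.

```python
# The example table as a list of rows: each row is one person (Age, Height, Weight).
data = [
    [25, 180, 75],
    [30, 165, 60],
    [22, 170, 68],
    [28, 160, 55],
]

# Row view: a single data point.
first_person = data[0]

# Column view: transposing the rows gives one tuple per feature.
ages, heights, weights = zip(*data)

# A column-wise summary: the mean of each feature.
feature_means = [sum(column) / len(column) for column in zip(*data)]

print(first_person)    # one data point
print(ages)            # the Age feature across all data points
print(feature_means)   # mean Age, Height, Weight
```

The explicit loop over columns here is exactly what vectorized libraries hide: they apply the operation to the whole matrix at once.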

A lot of things can be done once we have represented our data in matrix form. In neural networks, the weights and activations of neurons are represented as matrices, and operations on these matrices define the learning process. Matrices enable batch processing, where multiple data points can be processed simultaneously, improving computational efficiency and leveraging parallel processing capabilities. Techniques like Singular Value Decomposition (SVD) and Principal Component Analysis (PCA) rely on matrix operations to reduce dimensionality and extract meaningful features from the data. These things are possible because matrices have many beautiful properties.

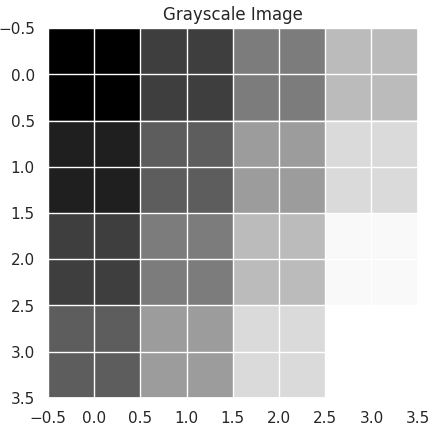

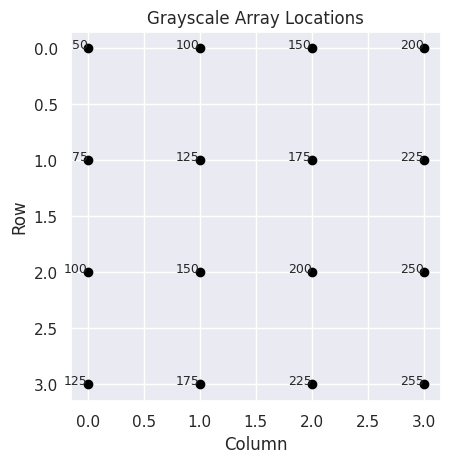

Similar to tabular data, images exist in a multidimensional space. A grayscale image is represented as a 2D array, where each element represents the intensity of a pixel. The values typically range from 0 (black) to 255 (white) for 8-bit images. Check the image below and see how the shade of each pixel changes as its value changes. You can even plot the location of pixels on a graph.

    [[ 50 100 150 200]
     [ 75 125 175 225]
     [100 150 200 250]
     [125 175 225 255]]

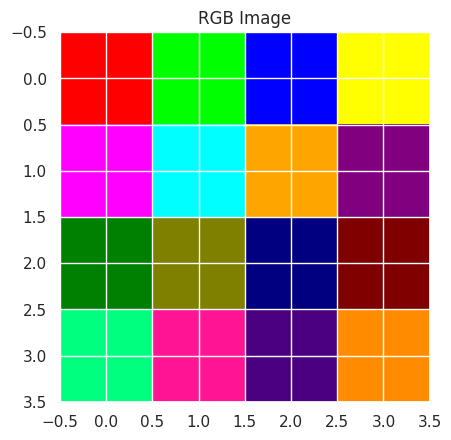

The matrix above is a 2D grayscale image. Similarly, you can have RGB images.

RGB Image Array:

    [[[255   0   0]
      [  0 255   0]
      [  0   0 255]
      [255 255   0]]

     [[255   0 255]
      [  0 255 255]
      [255 165   0]
      [128   0 128]]

     [[  0 128   0]
      [128 128   0]
      [  0   0 128]
      [128   0   0]]

     [[  0 255 127]
      [255  20 147]
      [ 75   0 130]
      [255 140   0]]]

Tabular data features are typically structured and discrete, with clear semantic meanings. Image features, especially raw pixel values, are unstructured and represent visual information. These pixel values are the basic features of an image. For a grayscale image of 28×28 pixels (like in MNIST digit recognition), you have 784 features (28 x 28). For color images, each pixel has three values (one for each color channel: Red, Green, and Blue). So, for an image of 28×28 pixels, you have 784 pixels but 3 values per pixel, totaling 2352 features.
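To make the feature counts concrete, here is a minimal sketch that flattens an image into a single feature vector. The `flatten` and `feature_count` helpers and the tiny 2×2 RGB image are assumptions made for illustration; array libraries provide equivalent reshaping operations.

```python
def flatten(nested):
    """Recursively flatten nested lists of pixel values into one flat list."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


def feature_count(height, width, channels=1):
    """Number of raw pixel features for an image of the given shape."""
    return height * width * channels


# The 4x4 grayscale matrix from the text: 16 pixels, 16 features.
gray = [
    [50, 100, 150, 200],
    [75, 125, 175, 225],
    [100, 150, 200, 250],
    [125, 175, 225, 255],
]
gray_features = flatten(gray)

# A hypothetical 2x2 RGB image: 4 pixels with 3 channel values each.
rgb = [
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [255, 255, 0]],
]
rgb_features = flatten(rgb)

print(len(gray_features), len(rgb_features))
print(feature_count(28, 28), feature_count(28, 28, channels=3))
```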

These pixels combine to create more complex features. At a basic level, features can be simple patterns like edges (where the color changes significantly) or corners. For example, in facial recognition, the edges of eyes, nose, and mouth are important features. At a higher level, features can represent whole objects or significant parts of objects. In a convolutional neural network (CNN), early layers detect simple features like edges, while deeper layers detect complex features like eyes, wheels, or even entire faces. When an image is processed by such a network, it creates feature maps that highlight where certain features (like edges or textures) are found in the image.

Audio signals can be represented as 1D arrays (waveforms), 2D matrices (spectrograms), or derived feature representations like Mel-Frequency Cepstral Coefficients (MFCCs). Text data can be converted into numeric representations using methods like Bag of Words (BoW), Term Frequency-Inverse Document Frequency (TF-IDF), word embeddings, and sentence embeddings, discussed in another tutorial on NLP.

Fundamentally, data in various forms (text, images, audio) is first converted into a numeric format (using matrices or arrays) to enable calculations, analysis, and machine learning operations.

Mathematics For Machine Learning: Tools

Once data is converted into a numeric form, mathematics becomes a powerful tool to analyze, interpret, and make decisions based on that data. When working with data, we often aim to make informed decisions or uncover insights. To achieve these goals, we use various tools. Probability theory helps us make predictions by quantifying the likelihood of different outcomes based on our data.

Statistics enables us to analyze and interpret data, revealing patterns and hidden relationships. Since data in ML is frequently represented in matrices, linear algebra provides the necessary framework for performing complex operations on our data. Additionally, we also use calculus to deal with various calculations involved in these two fields. Overall, machine learning uses various mathematical tools not limited to these four fields.

The main area is statistics, which encompasses probability concepts. Linear algebra and calculus then follow, aiding in complex calculations. We will focus on statistics and probability here. Linear algebra is discussed in the notes and in algorithm-specific tutorials. Calculus is something you are expected to know, at least at a basic level.

Statistics Basics For Machine Learning

Imagine you’re a researcher trying to understand people’s coffee consumption habits in a large city. You’re interested in discovering not only how much coffee people drink but also the factors influencing their choices. First, let’s start with descriptive statistics. This involves collecting data and summarizing it to get an overview of what’s happening. For instance, you gather information about the average number of cups of coffee people drink daily, the most popular types of coffee, and the most frequented coffee shops. Descriptive statistics help you describe the current situation but don’t tell you about the underlying patterns or causes.

To dig deeper, we turn to inferential statistics, where we make predictions and draw conclusions about the broader population based on a sample. For example, based on the data from a subset of the city’s population, you might infer the coffee consumption habits of the entire city. Inferential statistics allow you to make educated guesses and predictions beyond the data you directly observe. Here the term population refers to all the coffee drinkers in the city.

However, it’s impractical to survey everyone, so you select a sample, a smaller group representative of the population. The characteristics you observe in the sample, like the average number of cups consumed per day, are called statistics. These statistics help estimate parameters, the true values in the entire population, such as the average coffee consumption across all city residents. In many machine learning problems, the goal is mainly to estimate population parameters or understand underlying patterns within a broader population based on the sample data.

As you collect data, you encounter different types of variables. Numerical variables are quantitative and represent numbers, such as the number of cups of coffee consumed or the price paid per cup. These can be further divided into discrete variables (like the number of cups, which you can count) and continuous variables (like the temperature of the coffee, which can take any value within a range). On the other hand, categorical variables classify data into different categories, such as coffee types (espresso, latte, cappuccino) or customer demographics (age groups, gender).

The data you gather can be qualitative or quantitative. Qualitative data refers to descriptive information that categorizes or labels attributes, such as customer satisfaction levels (good, fair, poor). Quantitative data involves numerical measurements, like the amount of coffee consumed. Within quantitative data, you distinguish between discrete (countable, like the number of visits to a coffee shop) and continuous (measurable, like the duration of each visit). You can summarize these using the mean (average cups consumed) or proportion (percentage of people preferring a certain type of coffee).

To ensure your sample accurately represents the population, you must carefully choose your sampling method. Random sampling gives every individual an equal chance of being selected, reducing bias. This can be done through:

- Simple random sampling, where you randomly select participants from the population.

- Stratified sampling, where you divide the population into groups (like age or income brackets) and sample from each group proportionally.

- Cluster sampling, where you divide the population into clusters (like neighborhoods) and randomly select entire clusters.

You might also use systematic sampling, where you select every nth individual from a list, or convenience sampling, where you choose participants who are easy to reach. However, convenience sampling can introduce sampling errors if the sample isn’t representative of the population. Additionally, errors can arise from factors unrelated to the sampling process, known as nonsampling errors, such as misreporting by participants.
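The sampling methods above can be sketched with Python's `random` module. The population of 100 residents, the group labels, and the `stratified_sample` helper are hypothetical and only meant to show the mechanics; cluster sampling is left out for brevity.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: 100 residents, each tagged with an age group.
population = [
    {"id": i, "group": "under_30" if i < 40 else "30_plus"}
    for i in range(100)
]

# Simple random sampling: every resident has the same chance of selection.
simple = rng.sample(population, k=10)


def stratified_sample(people, key, fraction):
    """Sample the same fraction from each group, keeping group proportions."""
    strata = {}
    for person in people:
        strata.setdefault(person[key], []).append(person)
    chosen = []
    for members in strata.values():
        chosen.extend(rng.sample(members, k=round(len(members) * fraction)))
    return chosen


stratified = stratified_sample(population, "group", fraction=0.1)

# Systematic sampling: every 10th individual, starting from a random offset.
step = 10
start = rng.randrange(step)
systematic = population[start::step]

print(len(simple), len(stratified), len(systematic))
```

With a 10% fraction, the stratified sample keeps the 40/60 split of the population (4 and 6 people), which a simple random sample only matches on average.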

Understanding the level of measurement is crucial for analyzing data accurately. The nominal scale level categorizes data without a specific order (like coffee types). The ordinal scale level arranges data in a meaningful order but without precise differences between ranks (like customer satisfaction ratings). The interval scale level allows for meaningful differences between data points but lacks a true zero (like temperature in Celsius – zero degrees Celsius doesn’t mean there’s no temperature; it’s just a reference point). The ratio scale level has all the features of the interval scale plus a true zero (for example, zero weight means there’s no weight at all), allowing for meaningful ratios (like weight or income).

When analyzing data, you might look at the frequency of different responses, the relative frequency (proportion of the total), or the cumulative frequency (running total of frequencies). For example, you might find that 40% of respondents drink coffee daily, representing the relative frequency, and track how these percentages accumulate as you move through different categories.
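As a small illustration, the three kinds of frequency can be computed with the standard library. The survey responses below are made-up numbers chosen so that one category has a relative frequency of 40%.

```python
from collections import Counter
from itertools import accumulate

# Hypothetical survey answers: cups of coffee per day for 10 respondents.
responses = [0, 1, 1, 2, 2, 2, 3, 1, 2, 0]

frequency = Counter(responses)   # how often each answer occurs
values = sorted(frequency)       # answers in ascending order
total = len(responses)

# Relative frequency: share of the total for each answer.
relative = {v: frequency[v] / total for v in values}

# Cumulative frequency: running total of the counts.
cumulative = dict(zip(values, accumulate(frequency[v] for v in values)))

for v in values:
    print(v, frequency[v], relative[v], cumulative[v])
```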

But how can we describe our data to get meaningful insights? We can get an understanding of our data by using a few tools such as the mean, the median, and plots. Let’s understand them with examples.

Measures of the Location: Measures of location provide a way to understand the position of a particular value within the dataset. They are useful for understanding the distribution of data and identifying relative standings.

Imagine you’re analyzing customer spending data for an online store. You want to understand where individual spending figures lie relative to the entire dataset. This involves several key concepts:

- Percentiles divide the data into 100 equal parts. For example, a customer at the 90th percentile spends more than roughly 90% of all customers. To find the 90th percentile, you sort all spending values in ascending order and identify the value below which 90% of the data falls.

- Median (although categorized under central tendency) is the middle value when all data points are sorted. If there’s an even number of data points, it’s the average of the two middle values. The median provides a central value that’s not skewed by extreme outliers.

- Quartiles split the data into four equal parts. The first quartile (Q1) is the 25th percentile, the second quartile (Q2) is the median (50th percentile), and the third quartile (Q3) is the 75th percentile. The interquartile range (IQR) is the difference between Q3 and Q1 and measures the spread of the middle 50% of the data.

Outliers are values significantly different from the majority. You can detect them using the IQR: values below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR are often considered outliers.
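The quartile and outlier rules above can be sketched with `statistics.quantiles`. The spending values are hypothetical. Note that several conventions exist for interpolating quantiles, so other tools may report slightly different quartiles for the same data; this sketch uses the function's default method.

```python
import statistics

# Hypothetical customer spending, with one unusually large value.
spending = [20, 25, 30, 35, 40, 45, 50, 55, 60, 500]

# n=4 returns the three cut points Q1, Q2 (the median), and Q3.
q1, q2, q3 = statistics.quantiles(spending, n=4)
iqr = q3 - q1

# The 1.5 * IQR rule for flagging outliers.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
outliers = [x for x in spending if x < lower_fence or x > upper_fence]

print(q1, q2, q3, iqr)
print(outliers)
```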

In machine learning, percentiles and quartiles help in understanding the distribution of features. For instance, if you’re dealing with customer income data, the 25th percentile might show the lower end of the income distribution, and the 75th percentile the upper end. This helps in creating features that capture customer segmentation.

Unlike the mean, which can be heavily influenced by extreme values (outliers), the median provides a more robust measure of central tendency. This is particularly useful in datasets with skewed distributions or outliers. When normalizing or standardizing data, the median can be used instead of the mean to center data, especially in the presence of outliers. For example, using median-based centering can make your data preprocessing more robust. The median can be used to impute missing values in continuous features. This is often preferred over the mean when the data contains outliers or is skewed.
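Here is a minimal sketch of median imputation, assuming missing values are marked with `None`. The income values are invented, and the single large value shows why the median is often the safer fill value.

```python
import statistics

# Hypothetical feature with two missing entries and one extreme value.
incomes = [100, None, 150, 200, None, 5000]

observed = [x for x in incomes if x is not None]
fill_mean = statistics.mean(observed)      # pulled upward by the 5000
fill_median = statistics.median(observed)  # stays near the bulk of the data

# Replace each missing entry with the median of the observed values.
imputed = [fill_median if x is None else x for x in incomes]

print(fill_mean, fill_median)
print(imputed)
```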

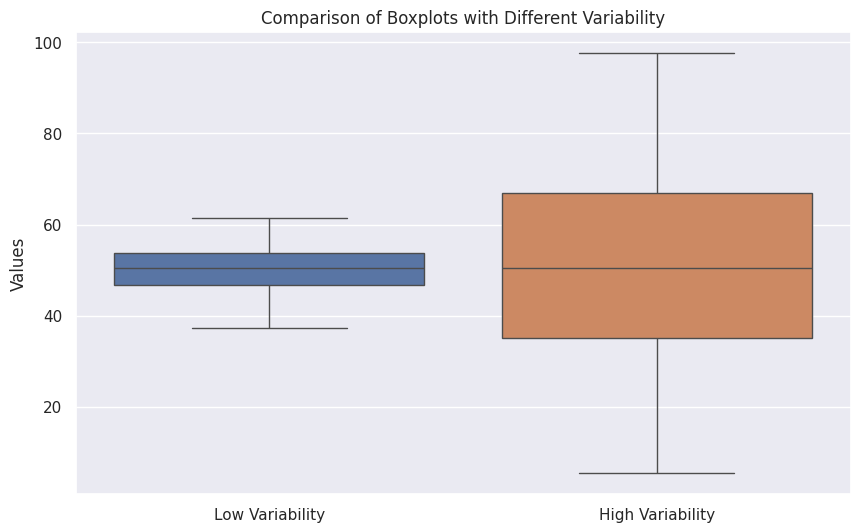

Box-whisker plots (or box plots) can be used to graphically analyze these values. The box represents the Interquartile Range (IQR), which covers the middle 50% of the data. The top and bottom edges of the box are the third quartile (Q3) and first quartile (Q1), respectively. A line inside the box indicates the median (Q2), which divides the dataset into two equal halves. It’s the 50th percentile. The whiskers extend from the edges of the box to the smallest and largest values within 1.5 * IQR from the quartiles. They show the range of the data excluding outliers. Any data points beyond this range are considered outliers and are often plotted as individual points.

If the median line is centered within the box, the middle of the data is roughly symmetric. If it sits closer to one edge of the box, the data may be skewed. A longer box indicates more variability in the middle 50% of the data. A shorter box indicates less variability.

Measures of Center: (Also known as measures of central tendency) Measures of center provide an indication of the general location of the data points, giving a sense of where the data tends to cluster.

- Mean is the average of all data points. For instance, if you have customer spending data of $100, $150, and $200, the mean is ($100 + $150 + $200) / 3 = $150. It provides a measure of central tendency but can be affected by outliers.

- Mode is the most frequently occurring value. If most customers spend $100, the mode is $100. It’s useful for understanding the most common spending level.

- Median is the middle value and is less affected by outliers compared to the mean. If customer spending data is $100, $200, and $5000, the median is $200, giving a better sense of central tendency when data is skewed.

The mean is sensitive to extreme values (outliers) because it takes into account every value in the dataset. In skewed distributions, the mean tends to be pulled towards the longer tail, hence it shifts in the direction of the skewness. The median is the middle value that divides the dataset into two equal parts. It is less sensitive to outliers and skewed values because it only depends on the order of values. In skewed distributions, the median is typically located between the mean and the mode. The mode is the most frequently occurring value in the dataset. In skewed distributions, the mode represents the peak or the highest point in the distribution and is typically unaffected by extreme values.
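A quick sketch with Python's `statistics` module shows how one extreme value affects the three measures differently. The spending figures are hypothetical.

```python
import statistics

# Hypothetical customer spending with one extreme value (right-skewed).
spending = [100, 100, 150, 200, 5000]

mean = statistics.mean(spending)      # uses every value, so 5000 pulls it up
median = statistics.median(spending)  # middle value of the sorted data
mode = statistics.mode(spending)      # most frequent value

print(mean, median, mode)
```

For this right-skewed sample the ordering is mode, then median, then mean, matching the description above.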

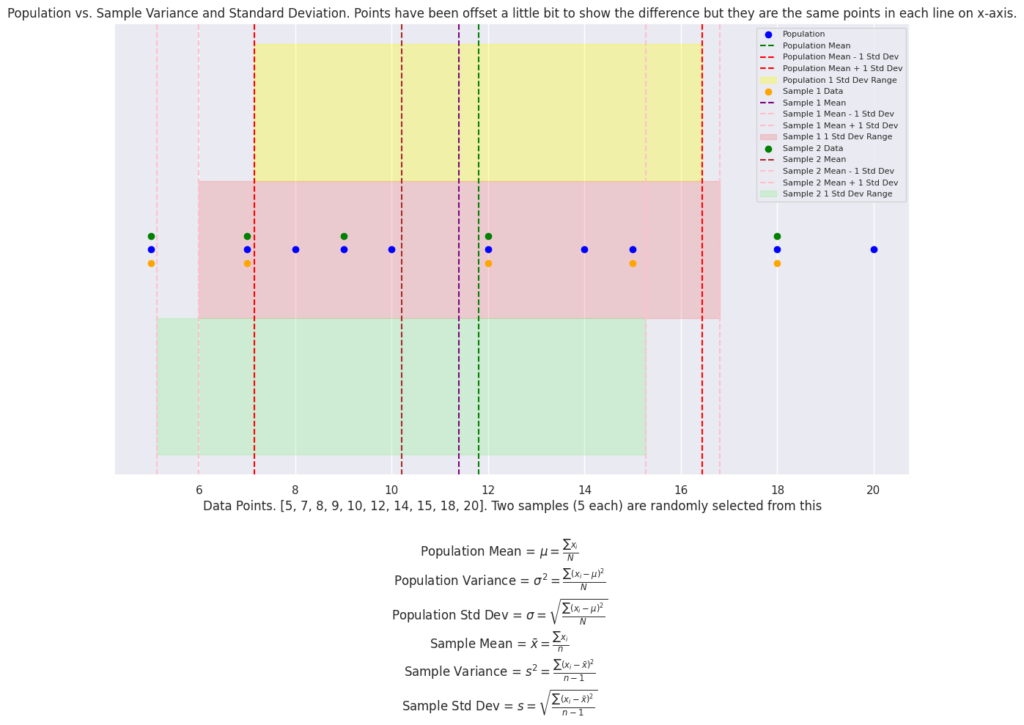

Since it is often impossible to measure the mean of an entire population, statisticians rely on the sample mean to estimate the population mean. The population mean (μ) is constant for a given population. The sample mean (x̄) varies depending on the sample taken.
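The difference between the fixed population mean and the varying sample mean can be seen in a small simulation. The population of the numbers 1 to 100 and the sample size of 10 are arbitrary choices for illustration.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical population: the numbers 1 to 100.
population = list(range(1, 101))
mu = statistics.mean(population)  # population mean: one fixed value

# Draw five different samples of size 10 and compute each sample mean.
sample_means = [
    statistics.mean(rng.sample(population, k=10))
    for _ in range(5)
]

print(mu)
print(sample_means)  # each sample gives its own estimate of mu
```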

Measures of Spread: They describe the extent to which data points in a dataset vary or differ from each other. Unlike measures of central tendency, which focus on locating the center of the data, measures of spread provide insight into the variability and dispersion within the dataset.

At its core, the idea of measuring spread arises from the need to quantify how scattered or concentrated the data points are around a central value. This concept is crucial because two datasets may have the same mean or median but can differ significantly in how their values are distributed. For example, in quality control, understanding variability is as important as knowing the average; a process with high variability might still produce defective items, even if the average quality is high.

Imagine a factory that produces metal rods with a target length of 100 cm. The acceptable tolerance range is between 99.5 cm and 100.5 cm. If the process produces rods with lengths ranging from 98 cm to 102 cm, this indicates high variability. While the average length might still be 100 cm, the range shows that some rods are significantly outside the acceptable limits, leading to a high defect rate.

The most basic measure of spread is the range, which is calculated as the difference between the maximum and minimum values in the dataset. The range provides a simple measure of the total spread of the data but is sensitive to extreme values or outliers, which can distort the true variability of the dataset. Despite its limitations, the range is useful for a quick, rough estimation of data dispersion.

A more sophisticated measure of spread is the variance, which quantifies the average squared deviation of each data point from the mean. The idea of squaring the deviations arises from the desire to eliminate the issue of positive and negative deviations canceling each other out. By squaring these differences, we ensure that all deviations contribute positively to the overall measure, thus providing a meaningful sense of the data’s variability. The variance provides a comprehensive picture of the data’s spread but comes with the drawback of being in squared units of the original data, making it less intuitive to interpret directly.

To address the interpretability issue of variance, the standard deviation was introduced. The standard deviation is the square root of the variance, bringing the measure back to the same units as the original data. This adjustment makes the standard deviation a more intuitive measure of spread, as it directly relates to the original data’s scale. The standard deviation can be read as the typical distance of data points from the mean, providing a clear and practical sense of variability. The theoretical basis for these measures lies in the desire to understand not just the typical or average behavior of data but also the extent to which individual observations deviate from this norm.

When we have data from an entire population, we calculate the variance by finding the average of the squared deviations from the mean. A deviation is the difference between a data point and the mean. To get the variance, we square these deviations (to avoid negative values canceling out the positive ones), sum them up, and then divide by the total number of data points, N.
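Written out, the steps above correspond to the following formulas. The symbols x_i (the individual data points) and σ (the population standard deviation) are notation assumed here; μ and N are as used in the text.

```latex
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i,
\qquad
\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2,
\qquad
\sigma = \sqrt{\sigma^2}
```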

When we only have a sample from the population, we need to account for the fact that a sample might not capture all the variability present in the entire population. To correct for this potential underestimation, we divide by n−1 instead of n, where n is the sample size. This adjustment, called Bessel’s correction, compensates for the tendency of a sample variance to underestimate the population variance. By dividing by n−1, we ensure that our estimate is unbiased.
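The following sketch computes the range, both versions of the variance, and the standard deviation by hand, then compares them with Python's `statistics` module. The five rod lengths are hypothetical values in the spirit of the factory example.

```python
import math
import statistics

# Hypothetical rod lengths in cm.
rod_lengths = [98.0, 99.0, 100.0, 101.0, 102.0]

data_range = max(rod_lengths) - min(rod_lengths)

mean = sum(rod_lengths) / len(rod_lengths)
squared_deviations = [(x - mean) ** 2 for x in rod_lengths]

# Treating the data as the whole population: divide by N.
population_variance = sum(squared_deviations) / len(rod_lengths)

# Treating the data as a sample: divide by n - 1 (Bessel's correction).
sample_variance = sum(squared_deviations) / (len(rod_lengths) - 1)
sample_std = math.sqrt(sample_variance)

print(data_range, population_variance, sample_variance, sample_std)

# The standard library implements the same two conventions.
print(statistics.pvariance(rod_lengths), statistics.variance(rod_lengths))
```

The sample variance is always slightly larger than the population variance for the same numbers, because the sum of squared deviations is divided by a smaller quantity.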

Now that we have some intuition for these basic concepts of descriptive statistics, let’s talk about probability, which will help us understand more advanced concepts related to statistical inference. Probability theory provides the foundation for statistical inference. Statistical methods often rely on probability distributions to model and analyze data.

Probability Basics For Machine Learning

The concept of probability started to take shape with the work of French mathematician Blaise Pascal and Italian mathematician Gerolamo Cardano. Cardano, in the 16th century, explored probability in gambling and games of chance. In the 17th century, Pascal’s correspondence with Pierre de Fermat laid the foundation for probability theory. They discussed problems related to gambling and formed the basis of modern probability theory. The 18th century saw further formalization by mathematicians like Abraham de Moivre and Jakob Bernoulli, who developed key principles of probability and laid the groundwork for statistical theory.

First, let’s talk about an experiment. Think of an experiment as a controlled process or activity where you observe outcomes. For example, let’s say you’re rolling a six-sided die. This action of rolling the die is an experiment. It’s planned and controlled because you decide when and how to roll the die, but the result (which number shows up) is not predetermined—it’s a matter of chance. If the result of an experiment isn’t due to chance, it’s typically a deterministic process rather than a probabilistic one. This means that the outcome is entirely predictable given the initial conditions.

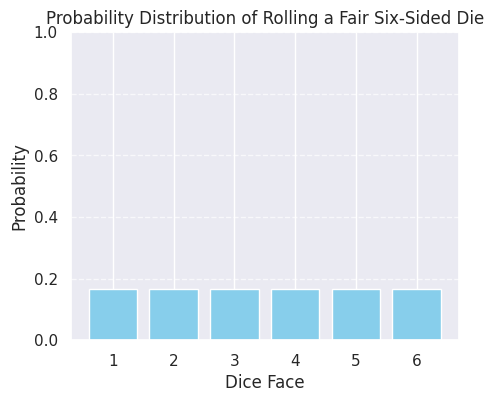

An outcome is what you get as a result of an experiment. When you roll a die, the outcome could be any one of the numbers from 1 to 6. Each roll produces one specific number, and that’s the outcome of that roll. The outcome should not be predictable with certainty before conducting the experiment; there should be an element of chance involved, as mentioned above. Outcomes do not have to be equally likely in general, but in many simple experiments, such as rolling a fair die, they are: each outcome is just as likely to happen as any other outcome.

The idea of equally likely outcomes simplifies probability calculations and helps us understand certain types of experiments better. The experiment must have a well-defined sample space, which is the set of all possible outcomes. This helps in calculating probabilities. Additionally, the experiment should be repeatable under the same conditions. This means you should be able to perform the experiment multiple times and observe outcomes that align with the defined sample space.

An event is a collection of outcomes that you’re interested in. Let’s say you want to know the probability of rolling an even number. The event here is “rolling an even number,” which includes the outcomes 2, 4, and 6. So, the event is just a way to group together specific outcomes that match what you’re interested in. The sample space is like a complete list of all possible outcomes of an experiment. For rolling a die, the sample space includes every number the die could land on: {1, 2, 3, 4, 5, 6}. It’s the full set of all possible outcomes you could get from the experiment.

An “or” event in probability refers to the situation where either one event, the other event, or both events occur. It is also known as the union of two events. An “and” event in probability refers to the situation where both events occur simultaneously. It is also known as the intersection of two events. Disjoint events, also known as mutually exclusive events, are events that cannot occur at the same time. In other words, if one event happens, the other cannot (simultaneously).

Probabilities are proportions of a whole. Probability theory is built on a set of foundational rules, known as axioms. These axioms are basic principles that define how probabilities work:

- Non-negativity: Probabilities are always positive or zero. This means that the likelihood of an event is never negative.

- Normalization: The total probability of all possible outcomes must add up to 1.

- Additivity: If two or more events are disjoint (they cannot occur at the same time), the probability that any one of them occurs is the sum of their individual probabilities.
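For a fair die, events can be modeled as Python sets, and "or" and "and" events become set union and intersection. The sketch below assumes equally likely outcomes, so the probability of an event is simply its share of the sample space; the `prob` helper is an illustrative name.

```python
from fractions import Fraction

# Sample space for one roll of a fair six-sided die.
sample_space = {1, 2, 3, 4, 5, 6}


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event & sample_space), len(sample_space))


even = {2, 4, 6}
odd = {1, 3, 5}
greater_than_four = {5, 6}

p_or = prob(even | greater_than_four)   # union: even OR greater than four
p_and = prob(even & greater_than_four)  # intersection: even AND greater than four

# The three rules, checked on this example.
non_negative = all(prob({outcome}) >= 0 for outcome in sample_space)
normalized = prob(sample_space) == 1
additive = prob(even | odd) == prob(even) + prob(odd)  # even and odd are disjoint

print(p_or, p_and)
print(non_negative, normalized, additive)
```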

Sometimes we want to know the probability of an event given that another event has already occurred. In that case, we calculate the conditional probability. The conditional probability of event A given that event B has occurred, denoted as P(A|B), is defined as: P(A|B) = P(A ∩ B) / P(B), provided that P(B) > 0. Here P(A ∩ B) is the probability that both A and B happen. All properties of probability discussed above remain valid for conditional probabilities as well.

Now what happens if the knowledge of B does not affect the chance of event A? In that case, A and B are independent events. This means P(A|B) = P(A), or equivalently P(A ∩ B) = P(A)P(B). If you are wondering whether disjoint events are independent, the answer is “no” (as long as both have nonzero probability). Disjoint simply means they cannot happen simultaneously (A ∩ B is empty, so P(A ∩ B) = 0). This is very different from independence: if one event happens, the other certainly does not, so knowing about one does change the probability of the other.
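Conditional probability and independence can both be checked by counting outcomes for a fair die. The events and helper names below are chosen only for illustration.

```python
from fractions import Fraction

sample_space = {1, 2, 3, 4, 5, 6}  # one roll of a fair die


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event & sample_space), len(sample_space))


def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    return prob(a & b) / prob(b)


def independent(a, b):
    """Check the product rule P(A and B) = P(A) * P(B)."""
    return prob(a & b) == prob(a) * prob(b)


even = {2, 4, 6}
above_three = {4, 5, 6}
at_most_four = {1, 2, 3, 4}

# Knowing the roll is above three raises the chance that it is even.
p_even_given_above_three = cond_prob(even, above_three)

# "Even" and "at most four" satisfy the product rule, so they are independent.
even_vs_at_most_four = independent(even, at_most_four)

# Disjoint events with nonzero probability are not independent.
disjoint_pair = independent({1, 2}, {3, 4})

print(p_even_given_above_three, even_vs_at_most_four, disjoint_pair)
```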

The relation P(A ∩ B | C) = P(A|C) P(B|C) is called conditional independence. Two events A and B are conditionally independent given a third event C if, once C is known, learning whether B has occurred does not change the probability of A. Independence does not imply conditional independence, and vice versa.

Sometimes we have multiple events, and if every pair of these events is independent, we call that pairwise independence. Pairwise independence does not imply that the events are mutually independent, because mutual independence requires the product rule to hold for every possible intersection, including the intersection of all the events, which may not be the case.
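A classic way to see the gap between pairwise and mutual independence is to flip two fair coins and look at three events: the first coin shows heads, the second coin shows heads, and both coins match. The sketch below checks the product rule for every pair and then for all three together.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space: all results of flipping two fair coins.
sample_space = set(product("HT", repeat=2))


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event), len(sample_space))


first_heads = {o for o in sample_space if o[0] == "H"}
second_heads = {o for o in sample_space if o[1] == "H"}
coins_match = {o for o in sample_space if o[0] == o[1]}

events = [first_heads, second_heads, coins_match]

# Product rule for every pair of events.
pairwise = all(prob(a & b) == prob(a) * prob(b) for a, b in combinations(events, 2))

# Product rule for the intersection of all three events.
triple_rule = (
    prob(first_heads & second_heads & coins_match)
    == prob(first_heads) * prob(second_heads) * prob(coins_match)
)
mutual = pairwise and triple_rule

print(pairwise, mutual)
```

Every pair passes the check, but the three-way intersection has probability 1/4 rather than 1/8, so the events are pairwise independent without being mutually independent.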

Now, imagine you’re trying to determine the likelihood of rain tomorrow, but you have different weather forecasts. Each forecast has its own probability of being correct and gives a different prediction for rain. The total probability theorem combines these probabilities to give an overall chance of rain by accounting for each forecast’s likelihood and its prediction: P(A) = P(A|B1) * P(B1) + P(A|B2) * P(B2) + … + P(A|Bn) * P(Bn), where the events B1, B2, …, Bn represent the different weather forecasts (assumed to be mutually exclusive, with probabilities that sum to 1) and event A is the event of it raining tomorrow.
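As a worked example of the formula, suppose there are three mutually exclusive scenarios B1, B2, and B3. All numbers below are invented for illustration; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

# Hypothetical probabilities of each scenario B1, B2, B3 (they sum to 1).
p_b = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]

# Hypothetical chance of rain under each scenario: P(A | Bi).
p_a_given_b = [Fraction(1, 10), Fraction(1, 2), Fraction(4, 5)]

# The scenarios must cover all possibilities.
covers_everything = sum(p_b) == 1

# Total probability: weight each conditional probability by its scenario.
p_a = sum(pa * pb for pa, pb in zip(p_a_given_b, p_b))

print(covers_everything, p_a, float(p_a))
```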

Another important concept that you will come across is Bayes’ Rule. Conceptually, it flips the relationship between cause and effect. If we know the probability of an effect given a cause, Bayes’ rule helps us determine the probability of the cause given the effect. Let’s say we have n possible causes, H1, H2, …, Hn, and an observed effect E. The probability of Hi given E is: P(Hi | E) = (P(E | Hi) * P(Hi)) / Σ[P(E | Hj) * P(Hj)] for j = 1 to n. By combining your initial belief (prior probability) with the likelihood of observing the new evidence given your belief, Bayes’ rule provides a way to calculate your updated belief (posterior probability).
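Here is a minimal sketch of Bayes' rule for a list of competing causes. The scenario is an assumption made up for illustration: a coin is either fair (H1) or two-headed (H2), both equally likely beforehand, and we observe heads (E). The `posterior` helper is a hypothetical name.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Return P(Hi | E) for each cause Hi, given P(Hi) and P(E | Hi)."""
    evidence = sum(l * p for l, p in zip(likelihoods, priors))  # P(E)
    return [l * p / evidence for l, p in zip(likelihoods, priors)]


# Prior belief: fair coin (H1) and two-headed coin (H2) are equally likely.
priors = [Fraction(1, 2), Fraction(1, 2)]

# Likelihood of observing heads under each cause: P(E | Hi).
likelihoods = [Fraction(1, 2), Fraction(1)]

post = posterior(priors, likelihoods)
print(post)  # updated beliefs after seeing heads
```

Seeing heads shifts belief toward the two-headed coin, because that cause explains the evidence better than the fair coin does.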

These were just the basics from your high school mathematics. Now let’s move forward.

Some Advanced Basics:

While outcomes represent the possible results of an experiment, they often lack a numerical representation that’s convenient for mathematical operations and analysis in real world applications. This is where random variables come into play. A random variable is essentially a numerical representation of an outcome. It assigns a numerical value to each possible outcome of a random experiment. This transformation allows us to use mathematical tools and techniques to analyze and understand the data. So, here is how we can differentiate:

Outcomes are the fundamental results of a random experiment. For instance, when flipping a coin, the outcomes are heads or tails. Events are a collection of one or more outcomes. For example, getting a head when flipping a coin is an event. A random variable, on the other hand, is a function that assigns a numerical value to each outcome. For instance, we can define a random variable X as follows: X = 1 if the outcome is heads, and X = 0 if the outcome is tails.

There are two types of random variables: discrete (a countable number of distinct values, such as the number of heads when flipping three coins, where the possible values are 0, 1, 2, or 3) and continuous (uncountably many values within a given range, such as the height of a person, which can be any value within a certain range).

Once we’ve assigned numerical values to the outcomes of a random experiment using a random variable, we can delve deeper into understanding the likelihood of these values occurring. This is where probability distributions come into play. Essentially, it’s a function that maps the values of the random variable to their corresponding probabilities.
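To illustrate, the sketch below enumerates the outcomes of flipping three fair coins, defines the random variable X as the number of heads, and builds the mapping from each value of X to its probability. It assumes all eight outcomes are equally likely.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All outcomes of flipping three fair coins, e.g. ('H', 'T', 'H').
outcomes = list(product("HT", repeat=3))


def X(outcome):
    """Random variable: maps an outcome to its number of heads."""
    return outcome.count("H")


# Count how many outcomes lead to each value of X, then normalize.
counts = Counter(X(o) for o in outcomes)
pmf = {value: Fraction(count, len(outcomes)) for value, count in sorted(counts.items())}

for value, p in pmf.items():
    print(value, p)
```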

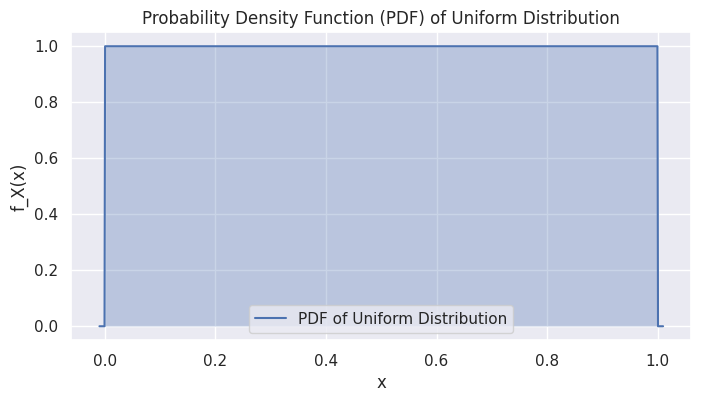

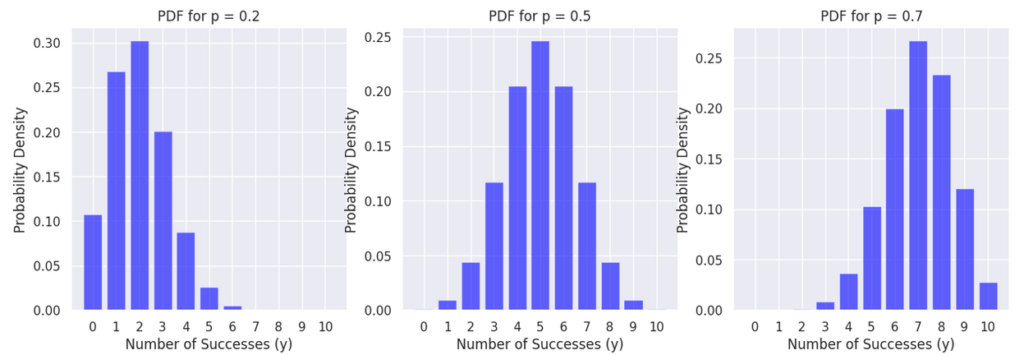

We have two main ways to describe probability distributions: probability mass functions (PMFs) for discrete random variables, and probability density functions (PDFs) for continuous random variables. A PMF assigns a probability to each individual value. A PDF, instead of assigning probabilities to individual values, represents the probability density at a particular point. The total area under the PDF curve equals 1, indicating the total probability of all possible values. It’s important to note that the probability of a specific value for a continuous random variable is actually zero; instead, we consider probabilities over intervals.

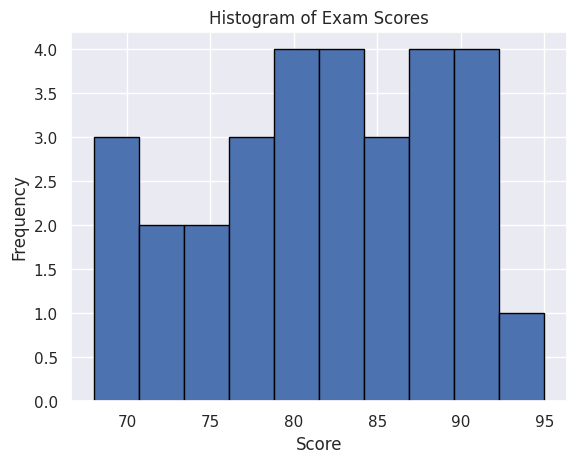

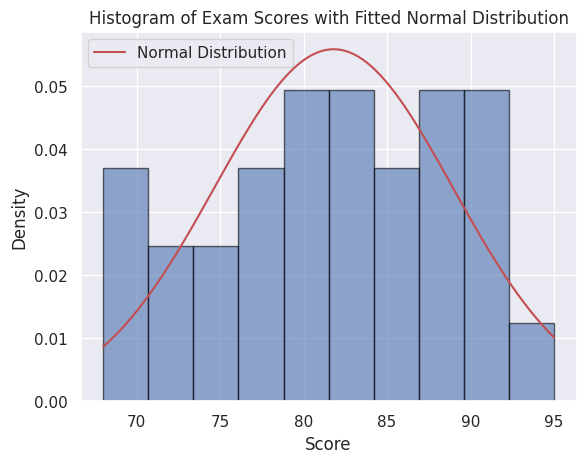

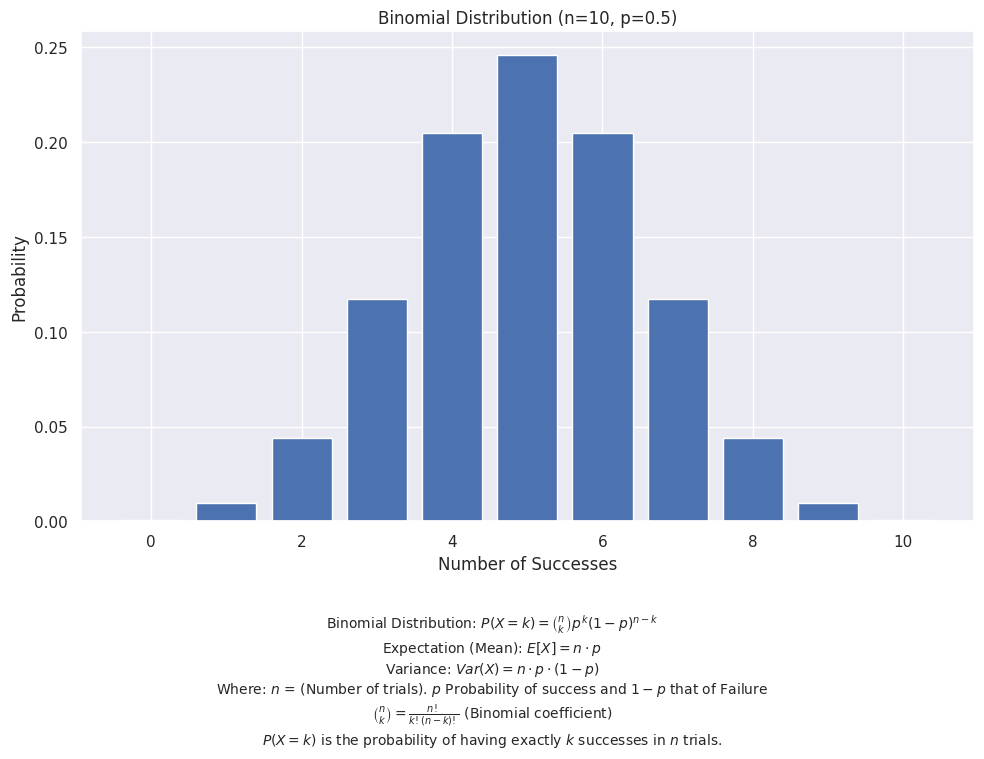

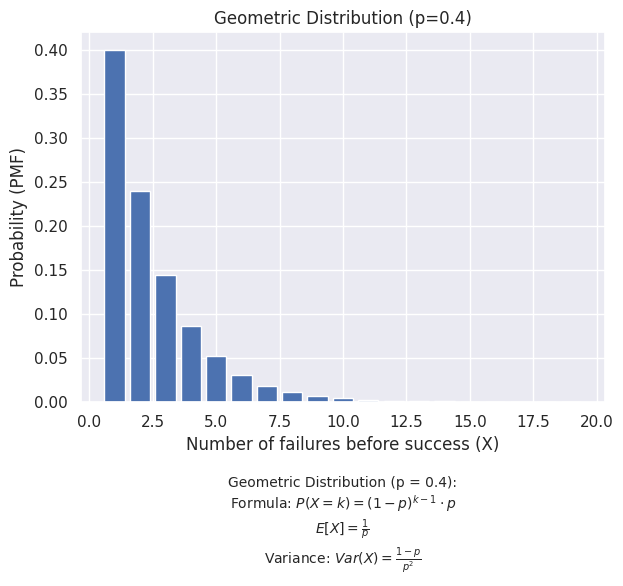

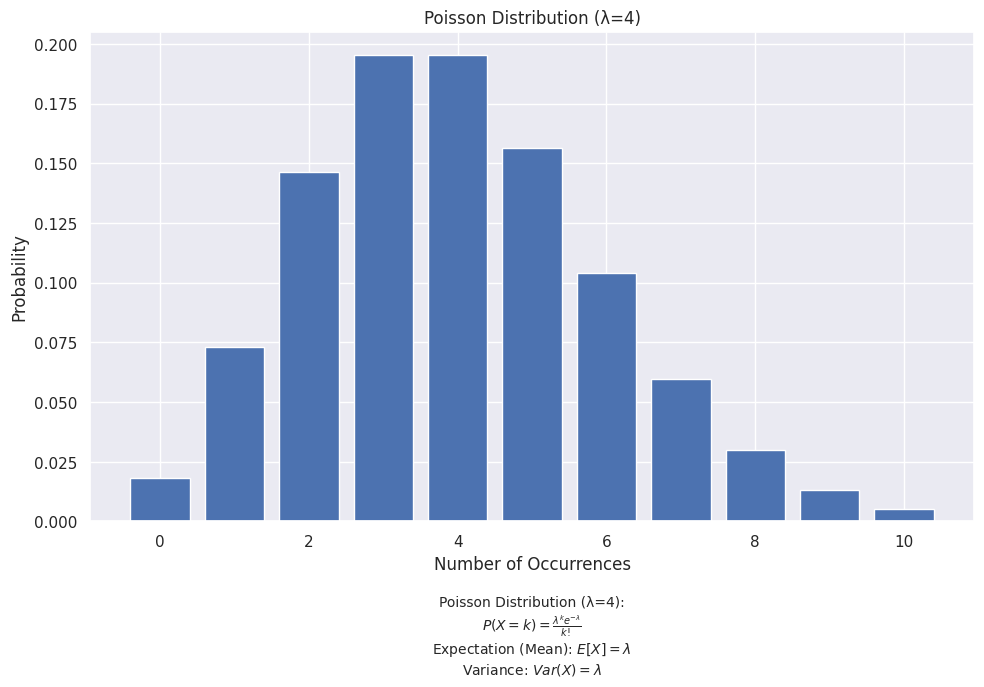

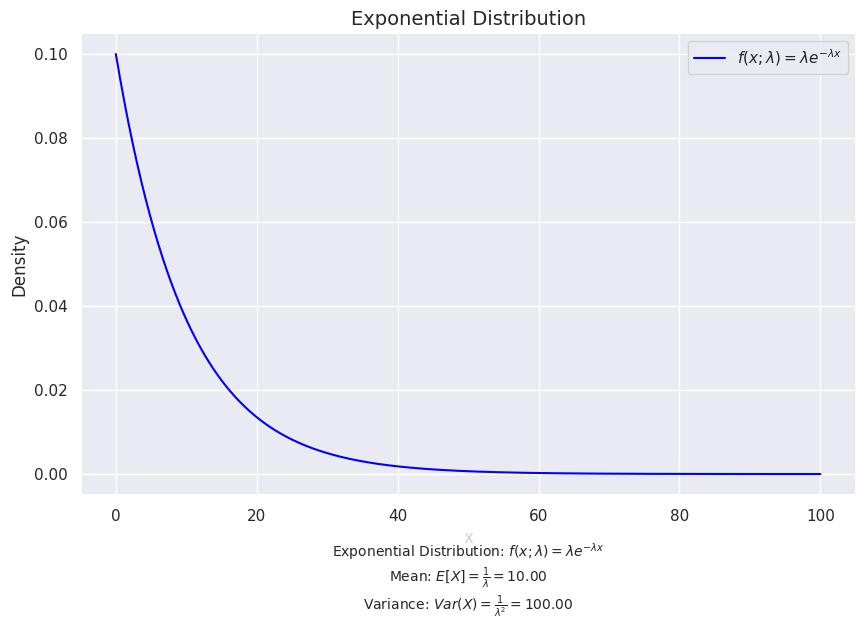

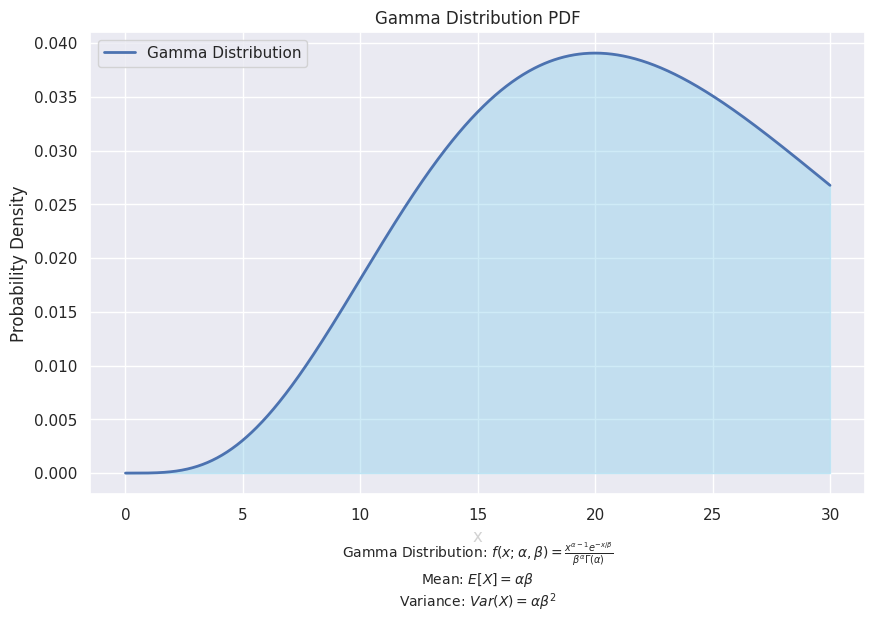

Let’s see how PMFs and PDFs look when we plot them:

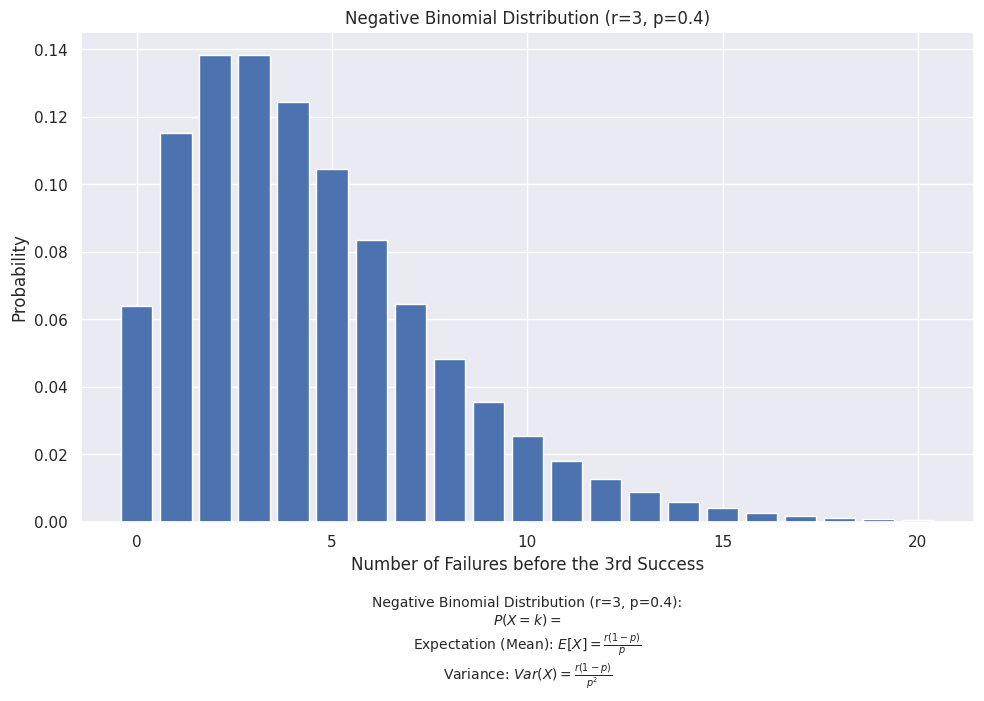

Read the captions of the plots above to get an idea of what PMFs and PDFs represent. Once we have the distribution of a random variable, we can use the concepts we’ve learned to extract insights from it or to summarize it. One such concept is expectation, which is similar to the mean but is used mostly in the context of PMFs and PDFs.

Expectation (or Expected Value) is a fundamental concept that provides a measure of the “central tendency” of a random variable. It represents the average value that the random variable is expected to take if an experiment were repeated many times. In both cases (PMFs and PDFs), the expectation is essentially a weighted average of all possible values, with the weights being the probabilities associated with those values. Similarly, we can use the variance and related quantities to describe a random variable. You can find the equations here.
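For a discrete random variable, the weighted average described above can be computed directly from the PMF. The sketch below uses a fair six-sided die as an assumed example and exact fractions to avoid rounding.

```python
from fractions import Fraction

# PMF of a fair six-sided die (assumed example): each face has probability 1/6
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

# Expectation: sum of value * probability over all possible values
mean = sum(x * p for x, p in pmf.items())

# Variance: expected squared deviation from the mean
variance = sum((x - mean) ** 2 * p for x, p in pmf.items())

print(mean, variance)
```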

Now, what can we do with probability distributions? Various problems in probability tend to have a distribution that we can use to perform calculations and solve our problems.

Note that PDFs are not the same as data distributions. PDFs don’t represent real-world data but rather an idealized or theoretical representation of how data might be distributed. Data distributions are empirical representations of how data is actually distributed in a dataset. They are derived from real-world observations and measurements. While they can often be approximated by PDFs, they are not identical. Data distributions may have irregularities, outliers, or specific patterns that don’t perfectly match any theoretical distribution.

By fitting a probability distribution to data, we construct a theoretical model that approximates the data’s underlying pattern. This model, often represented by a curve or equation, allows us to estimate probabilities, make predictions, identify anomalies, and draw statistical inferences.

Empirical data can be visualized with plots such as histograms and bar charts. On the theoretical side, there are various probability distributions for each case (discrete and continuous), each designed for a particular type of problem. Let’s look at them one by one.

Discrete Case(PMFs) – Probability Mass Functions:

A Bernoulli trial is a simple experiment with only two possible outcomes: success or failure. Think of flipping a coin: heads could be success, and tails would be failure. Each trial is independent, meaning the outcome of one trial doesn’t affect the next. The probability of success also remains the same for each trial. We will use Bernoulli trials in the next few explanations, and all of these points apply to the distributions below as well.

The Bernoulli distribution is one of the simplest and most fundamental probability distributions. It models the outcome of a single Bernoulli trial. If you put n = 1 in the binomial distribution explained below, you get the Bernoulli distribution.

A binomial distribution is a probability distribution that models the number of successes in a fixed number of independent Bernoulli trials. For instance, flipping a coin ten times and counting the number of heads follows a binomial distribution. Key characteristics include a fixed number of trials (n), a constant probability of success (p) for each trial, and independent trials. The outcomes of a binomial experiment fit a binomial probability distribution.
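As a small sketch of the idea, the binomial PMF can be written from its standard formula, C(n, k) · p^k · (1 − p)^(n − k). The function name `binomial_pmf` is an illustrative choice, and the coin is assumed to be fair.

```python
from math import comb


def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials with success probability p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Ten fair coin flips: probability of each possible number of heads
pmf = [binomial_pmf(k, 10, 0.5) for k in range(11)]
print(pmf[5])      # probability of exactly 5 heads
print(sum(pmf))    # the probabilities add up to 1 (up to rounding)

# With n = 1 the binomial reduces to a single Bernoulli trial
print(binomial_pmf(1, 1, 0.3), binomial_pmf(0, 1, 0.3))
```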

Geometric distributions are used to model the number of trials needed to achieve the first success in a sequence of independent Bernoulli trials. Among discrete distributions, the geometric distribution is the only one that is memoryless. This means that the probability of the next success doesn’t depend on the number of failures that have already occurred.

The geometric distribution is closely related to another distribution called the negative binomial distribution, which models the number of failures before a specified number of successes occurs in a sequence of independent Bernoulli trials. The success probability of each trial is still constant, but the number of trials still needed depends on how many successes you’ve already accumulated, so the negative binomial distribution does not have the memorylessness property (except when the specified number of successes is 1, which is the geometric case).
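The memorylessness of the geometric distribution can be checked numerically. The sketch below compares P(X > m + n | X > m) with P(X > n), where X is the number of trials until the first success. The values of `p`, `m`, and `n` are arbitrary assumptions.

```python
def geometric_tail(n, p):
    """P(X > n): the first n trials are all failures."""
    return (1 - p) ** n


p = 0.2        # assumed success probability per trial
m, n = 5, 3    # m failures already seen; ask about n further trials

# Conditional probability P(X > m + n | X > m)
conditional = geometric_tail(m + n, p) / geometric_tail(m, p)
# Unconditional probability P(X > n), as if starting from scratch
unconditional = geometric_tail(n, p)

print(conditional, unconditional)
```

The two numbers agree up to floating-point rounding, which is exactly what memorylessness says: past failures do not change the outlook.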

The Poisson distribution models the number of events occurring in a fixed interval of time or space when these events happen independently of each other at a constant average rate. Consider a call center receiving incoming calls. The exact timing of each call is unpredictable, but on average, the center receives a certain number of calls per hour. This scenario is well-suited for a Poisson distribution. It models the number of events (incoming calls, even if they are rare, like 2-3 calls) occurring within a fixed interval (an hour) when the events happen independently at a constant average rate. Similarly, if mutations in a segment of DNA occur with an average rate of 0.5 mutations per cell, the number of mutations observed in a large sample of cells can be modeled using the Poisson distribution, despite mutations being rare events.

When the number of trials is vast and the probability of success is minuscule, the distribution of the number of successes approximates a Poisson distribution, the distribution of counts in a Poisson process where events occur independently and randomly at a constant rate. As n grows larger and p becomes smaller, with λ = n⋅p held constant, the binomial distribution approaches the Poisson distribution.
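The binomial-to-Poisson limit can be observed numerically. The following sketch keeps λ = n · p fixed at an assumed value of 2 and measures how far the two PMFs are apart for k = 0..10 as n grows. The function names are illustrative.

```python
from math import comb, exp, factorial


def binomial_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    return exp(-lam) * lam**k / factorial(k)


def max_gap(n, lam):
    """Largest difference between the two PMFs over k = 0..10, with p = lam / n."""
    p = lam / n  # keep n * p = lam fixed while n grows
    return max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(11))


lam = 2.0  # assumed average number of events
for n in (10, 100, 10_000):
    print(f"n = {n:>6}: largest PMF difference = {max_gap(n, lam):.6f}")
```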

The multinomial distribution is a generalization of the binomial distribution to more than two possible outcomes. It models the number of occurrences of each outcome in a fixed number of trials, where each trial results in one of several possible outcomes. A simple example is when you roll a fair die 10 times, and you want to model the number of times each of the 6 faces appears. Unfortunately, it is difficult to plot the PMFs. For more information read this.

There are other distributions too, but the ones mentioned above are the most common in the discrete case. Feel free to explore further. The core idea is that you need to find a distribution that fits your problem and understand what a probability distribution means.

Continuous Case(PDFs) – Probability Density Functions:

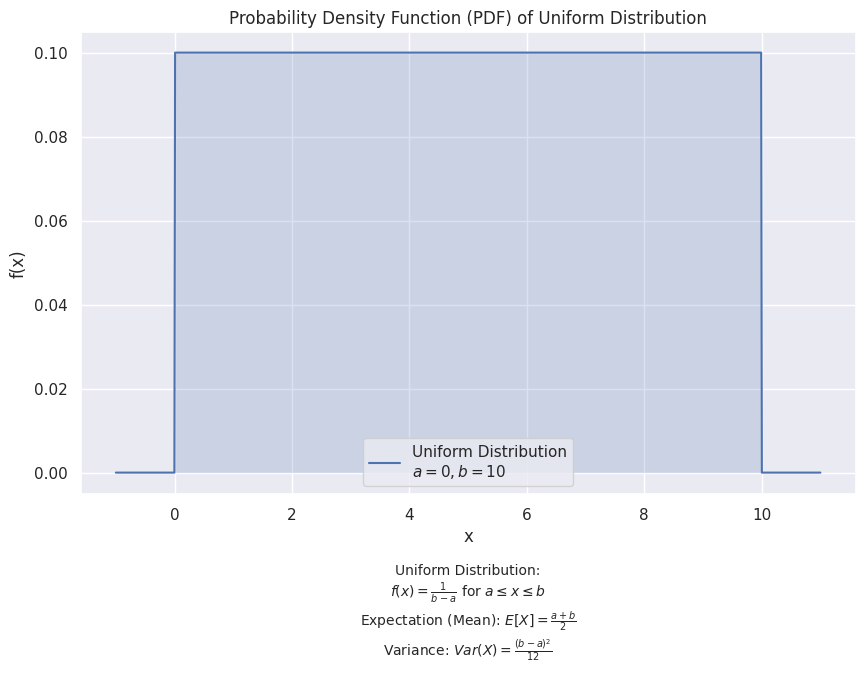

The uniform distribution is one of the simplest continuous probability distributions. It describes a situation where all outcomes in a given range are equally likely. It can be discrete as well.

The PDF f(x) gives the density of probability at a particular point x in the continuous interval. Unlike discrete distributions where the height represents the probability of a specific outcome, in continuous distributions, the height does not represent the probability directly but rather the density. The “density” at a particular point x indicates how concentrated (more likely) the probability is around that point, but it does not represent the probability of X = x directly. The total area under the curve is 1. The probability can be found by calculating the area under the curve between two points. The probability that a continuous random variable equals any specific value is zero. Instead, we talk about the probability of the variable falling within a range.

The PDF f(x) of a continuous random variable can take on values greater than 1, but this doesn’t mean that the probability at any specific point is greater than 1 because probabilities are associated with intervals, not specific points.
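A uniform distribution on a short interval is a simple way to see a density above 1. The sketch below assumes the interval [0, 0.5], where the density is 2 everywhere inside the interval, yet every probability is still an area between 0 and 1.

```python
# Uniform distribution on [a, b]: constant density 1 / (b - a) inside the interval
a, b = 0.0, 0.5          # assumed interval, chosen so the density exceeds 1
density = 1 / (b - a)


def prob_between(lo, hi):
    """P(lo <= X <= hi): area under the flat density, clipped to [a, b]."""
    lo, hi = max(lo, a), min(hi, b)
    return max(hi - lo, 0.0) * density


print(density)                 # height of the PDF
print(prob_between(a, b))      # total area under the curve
print(prob_between(0.1, 0.2))  # probability of an interval
print(prob_between(0.3, 0.3))  # a single point carries no probability
```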

The exponential distribution is often used to model waiting times between independent events that happen at a constant rate. For example, it can describe the time until a radioactive particle decays, the time until a customer arrives at a service point, or the time between earthquakes in a region. A key characteristic of the exponential distribution is its memoryless property.

This means that the probability of an event occurring in the future is independent of how much time has already passed. In other words, if you’ve been waiting for a bus for 10 minutes, the probability of the bus arriving in the next 5 minutes is the same as if you had just started waiting. Among continuous distributions, this property is unique to the exponential distribution and has significant implications for its applications. The exponential distribution is defined by a single parameter, lambda (λ), which represents the average rate of events. A higher value of λ indicates that events happen more frequently (shorter waiting times), while a lower λ means events occur less frequently (longer waiting times).

Take λ = 0.1 as an example. The PDF curve shows the probability density, which decreases as x increases. This reflects the fact that short waiting times are more likely, while long waiting times are less likely. The mean is E[X] = 1/λ = 10. This represents the average time you would wait for an event (e.g., the next bus) to occur. The exponential distribution is skewed to the right, meaning it has a longer tail on the right side. This reflects the fact that while short waiting times are common, there is also a possibility of very long waiting times.
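The bus example can be checked with the exponential survival function P(X > t) = e^(−λt). This is a minimal sketch using λ = 0.1 and assuming time is measured in minutes.

```python
from math import exp

lam = 0.1  # rate from the text; the unit (events per minute) is an assumption


def survival(t):
    """P(X > t) for an exponential waiting time with rate lam."""
    return exp(-lam * t)


mean_wait = 1 / lam  # E[X] = 1 / lambda

# Already waited 10 minutes: probability the bus arrives within the next 5
p_after_waiting = 1 - survival(15) / survival(10)
# Just arrived: probability the bus arrives within 5 minutes
p_fresh = 1 - survival(5)

print(mean_wait, p_after_waiting, p_fresh)
```

The last two values agree up to rounding, so the time already spent waiting makes no difference.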

The Gamma distribution is a two-parameter family of continuous probability distributions, which generalizes the exponential distribution. It is often used to model the time until an event occurs k times (where k is not necessarily an integer). This distribution has two parameters: a shape parameter and a scale parameter. You will also come across the rate parameter, which is simply the inverse of the scale parameter.

The shape parameter, often denoted by α or k, controls the shape of the Gamma distribution. In the context of a Poisson process, the shape parameter of Gamma distribution can be interpreted as the number of events you are waiting for. For instance, if α=3, the Gamma distribution models the waiting time until three events have occurred. The value of α influences the skewness and mode of the distribution: If α < 1: The distribution is highly skewed to the right. If α = 1: The distribution is an exponential distribution. For α > 1, the distribution is skewed right but becomes more symmetric as α increases.

The rate parameter, denoted by λ, controls the rate at which events occur in the Poisson process. It is the reciprocal of the scale parameter (θ). The rate parameter represents the average number of events per unit time. A larger λ results in a steeper, more compressed distribution (shorter waiting times), while a smaller λ results in a flatter, more spread-out distribution (longer waiting times).

The scale parameter, denoted by θ or β, is the reciprocal of the rate parameter. It controls the scale or spread of the distribution, and in the Poisson-process picture it represents the average waiting time between events. These parameters allow the Gamma distribution to model a wide range of phenomena, from highly skewed distributions to more symmetric ones. For example, suppose you are monitoring a server and the requests follow a Poisson process with an average of λ = 0.2 requests per second (equivalently, a scale of β = 5 seconds per request). You might want to model the time until you receive 5 requests. The waiting time for these 5 requests can be modeled by a Gamma distribution with α = 5 and λ = 0.2.
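For the server example, the shape is an integer, so the Gamma CDF has a closed form (this special case is often called the Erlang distribution): waiting at most t seconds for the k-th request is the same as seeing at least k Poisson events by time t. The sketch below uses that identity. The function name `erlang_cdf` is an illustrative choice.

```python
from math import exp, factorial

alpha = 5        # number of requests we wait for (shape)
lam = 0.2        # requests per second (rate)
theta = 1 / lam  # scale: average seconds between requests


def erlang_cdf(t, k, rate):
    """P(T <= t) for a Gamma distribution with integer shape k and the given rate."""
    # Probability that fewer than k Poisson events have occurred by time t
    fewer_than_k = sum(
        exp(-rate * t) * (rate * t) ** i / factorial(i) for i in range(k)
    )
    return 1 - fewer_than_k


mean_wait = alpha * theta  # the mean of a Gamma distribution is shape * scale
print(mean_wait)                   # average seconds until the 5th request
print(erlang_cdf(25, alpha, lam))  # chance the 5th request arrives within 25 s
```

With shape 1 the same function reduces to the exponential CDF, which matches the statement that α = 1 gives an exponential distribution.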

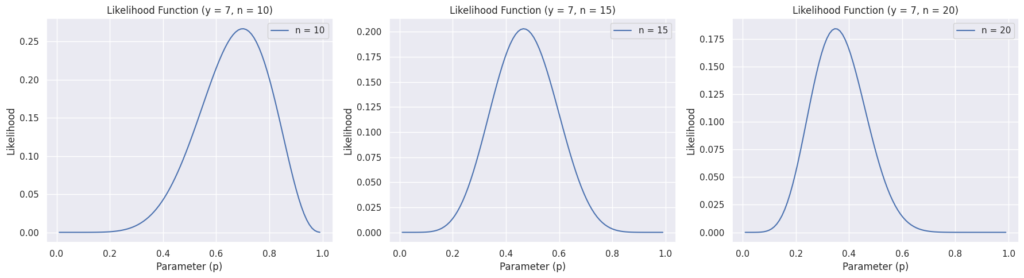

The Beta distribution is a continuous probability distribution defined on the interval [0, 1] or (0, 1). This makes it particularly useful for modeling probabilities themselves. It’s characterized by two positive shape parameters, typically denoted as α (alpha) and β (beta). These parameters control the shape of the distribution. Intuitively, the Beta distribution can be thought of as representing the distribution of probabilities. For example, if you’re uncertain about the true probability of success of a coin flip, you might model your belief about this probability using a Beta distribution.

One of the key strengths of the Beta distribution is its flexibility. By varying the values of α and β, you can create a wide range of shapes, from highly skewed distributions to nearly uniform ones. This makes it a versatile tool for modeling various phenomena. Read this for more.

A conjugate prior is a special type of prior distribution that makes this updating process mathematically convenient. It ensures that the posterior distribution belongs to the same family as the prior distribution. For the Binomial likelihood, the Beta distribution is the conjugate prior. This means that if you start with a Beta prior and update it with Binomial data, the posterior distribution will also be a Beta distribution. This property simplifies the process of updating beliefs with new data, making the Beta distribution a convenient choice for modeling probabilities in Bayesian inference.
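Because of conjugacy, the update rule is plain addition: a Beta(α, β) prior combined with s successes and f failures gives a Beta(α + s, β + f) posterior. The sketch below illustrates this with an assumed prior and assumed coin-flip data. The helper names are hypothetical.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing Binomial data under a Beta prior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


prior = (2, 2)  # assumed prior: mild belief that the coin is roughly fair
posterior = update_beta(*prior, successes=7, failures=3)  # assumed data: 7 heads in 10 flips

print(prior, beta_mean(*prior))
print(posterior, beta_mean(*posterior))
```

The posterior mean moves from the prior mean toward the observed frequency of heads, and it would move further with more data.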

Before we move on to one of the most important PDFs used in machine learning, there are a few things we should not skip:

Cumulative Distribution Functions (CDFs): As you move along the x-axis (values of the random variable), the CDF tells you the accumulated probability up to that point. In other words, the value of the CDF at a particular point is the proportion of the probability distribution that lies at or below that point. It exists for both the continuous and the discrete case.

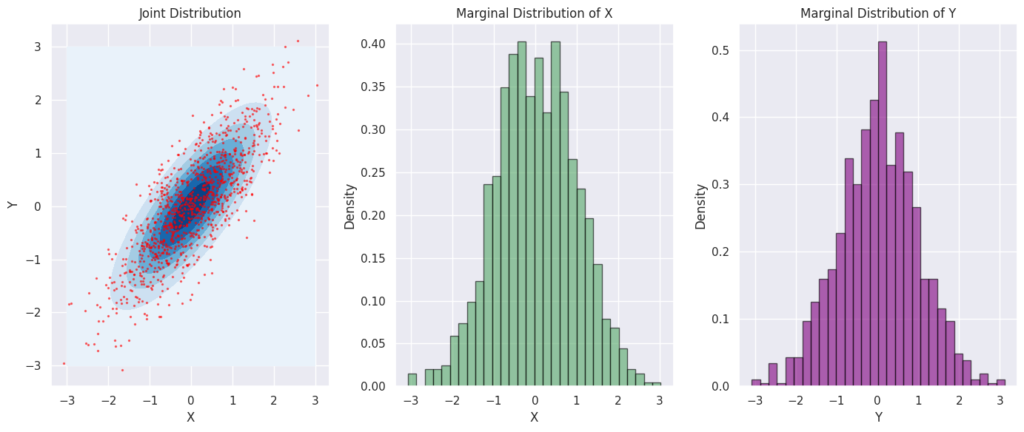

A joint distribution describes the probability of two or more random variables taking particular values simultaneously. It provides a comprehensive view of how these variables interact with each other and how their joint behavior can be quantified. For discrete random variables X and Y, the joint PMF is denoted as: P(X = x, Y = y) = f(x, y), where f(x, y) is the probability that X takes the value x and Y takes the value y simultaneously. The marginal PMF of X is obtained by summing the joint PMF over all possible values of Y: P(X = x) = Σ_y P(X = x, Y = y)

For continuous random variables X and Y, the joint PDF is denoted as fX,Y(x, y). The probability of X falling between a and b, and Y falling between c and d, is given by the double integral of the joint PDF over that region: P(a ≤ X ≤ b, c ≤ Y ≤ d) = ∫[a,b] ∫[c,d] fX,Y(x, y) dy dx. For more details read this.

The marginal distribution of a subset of variables within a joint distribution focuses on the probability distribution of those specific variables, disregarding the others. It simplifies the analysis by providing insights into the behavior of individual variables independently of their joint behavior with other variables.

You will also hear about conditional distributions, which describe the probability distribution of one or more random variables given that other variables are fixed at certain values. In the context of joint distributions, the conditional distribution provides insights into how one variable behaves when another variable is known or constrained to a specific value. It helps us understand how the distribution of one variable is influenced by the value of another variable.
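The three notions (joint, marginal, and conditional) can be seen side by side on a tiny discrete example. The joint PMF below is made up for illustration; exact fractions keep the arithmetic easy to follow.

```python
from fractions import Fraction as F

# Assumed joint PMF P(X = x, Y = y) for two binary random variables
joint = {
    (0, 0): F(3, 10), (0, 1): F(1, 10),
    (1, 0): F(2, 10), (1, 1): F(4, 10),
}


def marginal_x(x):
    """P(X = x): sum the joint PMF over every value of Y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)


def conditional_y_given_x(y, x):
    """P(Y = y | X = x) = P(X = x, Y = y) / P(X = x)."""
    return joint[(x, y)] / marginal_x(x)


print(marginal_x(0), marginal_x(1))
print(conditional_y_given_x(1, 1))
```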

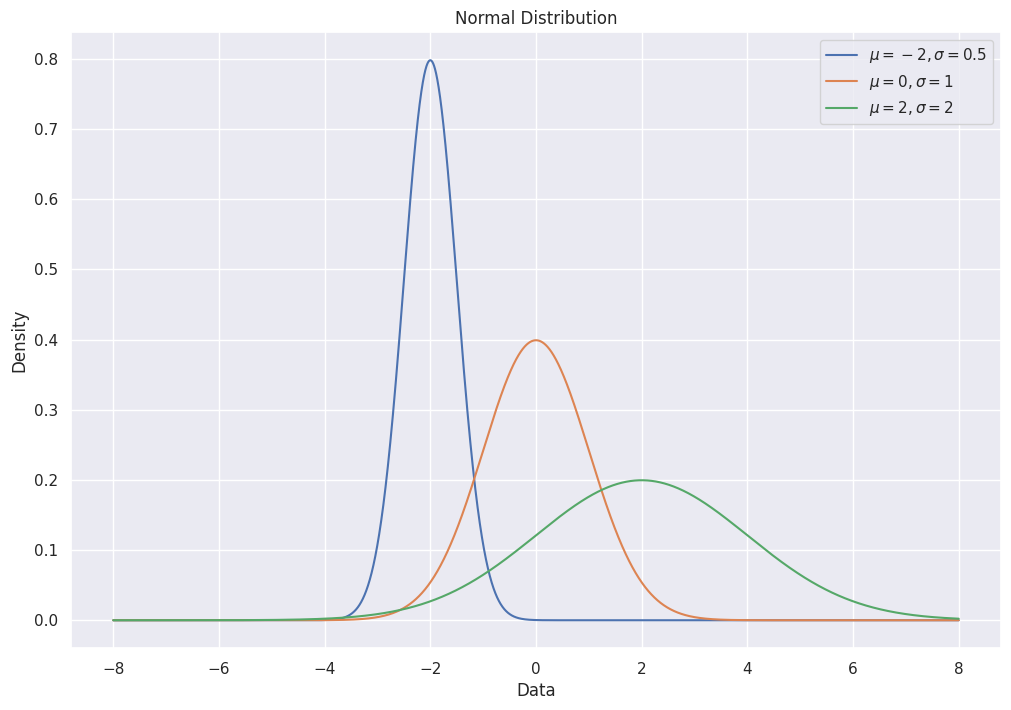

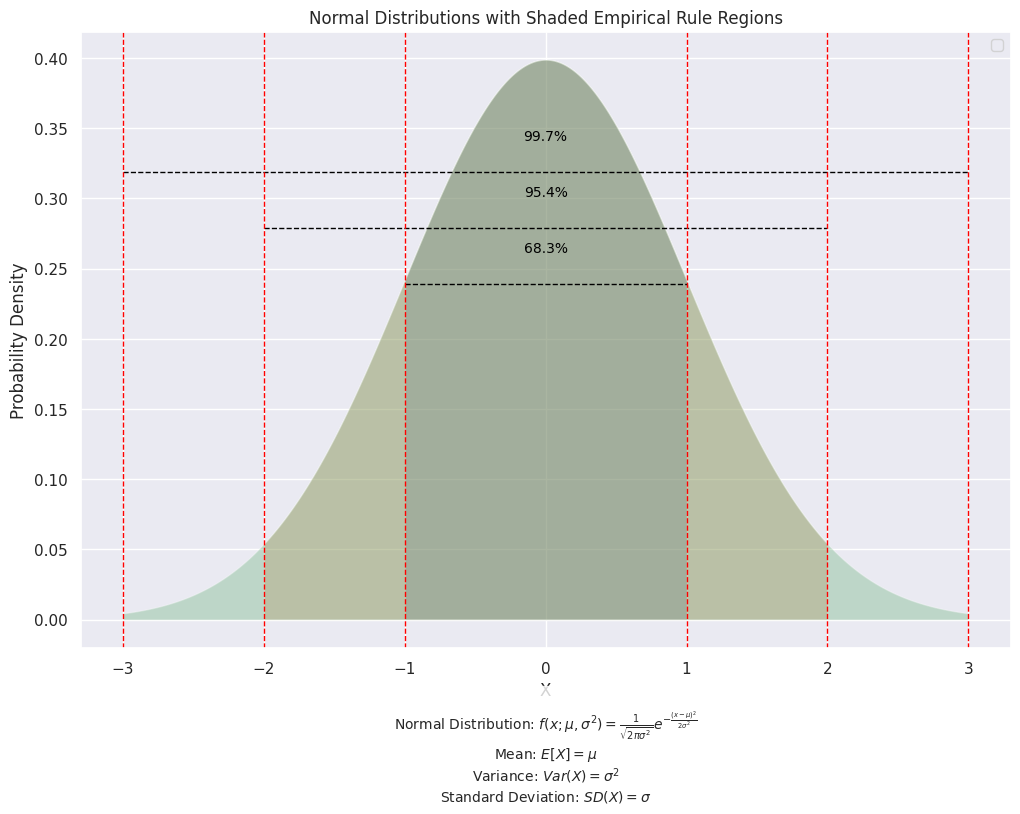

Now let’s come to the Normal Distribution or Gaussian distribution, which is the most important one for machine learning. The normal distribution is a continuous probability distribution characterized by its bell-shaped curve. It is defined by two parameters: the mean (μ) and the standard deviation (σ). The mean represents the center of the distribution, while the standard deviation determines its spread. It is symmetric around the mean, and the area under the curve is 1. Shifting μ to the right or left moves the entire curve without changing its shape. A larger σ results in a flatter, wider curve, while a smaller σ produces a taller, narrower curve.

Now, what if we want to compare values from two different normal distributions? In that case, we can use z-scores. A z-score represents the number of standard deviations a data point is from the mean of its distribution: z = (x - μ) / σ, where x is a raw value. For instance, a z-score of 2 means the data point is 2 standard deviations above the mean. Z-scores standardize data to have a mean of 0 and a standard deviation of 1. This makes it easier to compare data points from different distributions. The standard normal distribution is a special case of the normal distribution with a mean of 0 and a standard deviation of 1. The CDF of the standard normal distribution, evaluated at z, gives the probability that a standard normal random variable is less than or equal to z.

Z-scores can help identify outliers. A data point with a Z-score greater than 3 or less than -3 is typically considered an outlier, as it lies far from the mean.
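A short sketch of these ideas using Python’s standard library `statistics.NormalDist`. The exam scores, means, and standard deviations are invented for illustration, and `is_outlier` simply encodes the rule of thumb of 3 standard deviations mentioned above.

```python
from statistics import NormalDist


def z_score(x, mu, sigma):
    """Number of standard deviations that x lies from the mean mu."""
    return (x - mu) / sigma


def is_outlier(x, mu, sigma, threshold=3):
    """Rule of thumb: flag points more than `threshold` standard deviations away."""
    return abs(z_score(x, mu, sigma)) > threshold


# Assumed example: two exams graded on different scales
z_math = z_score(85, mu=70, sigma=10)
z_physics = z_score(60, mu=50, sigma=4)
print(z_math, z_physics)  # the physics score is further above its mean

# Standard normal CDF: probability of a value at or below z_math
print(NormalDist(mu=0, sigma=1).cdf(z_math))

print(is_outlier(105, mu=70, sigma=10), is_outlier(85, mu=70, sigma=10))
```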

Many machine learning methods make normality assumptions. Linear Regression (for its error terms, in classical inference) and Gaussian Naive Bayes (for the features within each class) rely on the assumption of normally distributed data or errors. Transforming features so that they are closer to normally distributed can improve the performance of such models. Gaussian Mixture Models (GMMs) use multiple normal distributions to model complex data distributions. Deviations from normality can indicate outliers or anomalies in the data. When evaluating machine learning models, performance metrics (such as accuracy or error rates) can be analyzed using normal distribution assumptions to understand variability and confidence intervals.

The Central Limit Theorem:

In machine learning, you will often hear the term IID, which means Independent and Identically Distributed. Independent means that the observations or data points do not influence each other: knowing one tells you nothing about another. Identically distributed means that all observations come from the same probability distribution.

Suppose you’re training a machine learning model to classify emails as spam or not spam. You collect a dataset of 1,000 emails, and you label each one as either spam or not spam. If these emails are IID, it means: Whether one email is spam or not does not affect whether another email is spam. All emails are drawn from the same population, meaning the process that generates these emails (and their labels) is consistent throughout the dataset.

When training a model, the IID assumption ensures that the data you’re using to train the model is representative of the underlying process you want to model. This helps in generalizing from the training data to unseen data. The IID assumption is crucial for making valid statistical inferences. It allows for the use of central limit theorems, which underpin the construction of confidence intervals and hypothesis tests. The IID assumption helps in estimating the model’s performance on unseen data. For example, cross-validation assumes that the training and validation sets are IID, ensuring that performance estimates are unbiased.

If the data is not identically distributed (e.g., if the training data comes from a different distribution than the test data), the model’s estimates can be biased. This might lead to poor generalization on new data. If the data points are not independent, the model might overfit or underfit.

Now let’s consider an example. Imagine you love coffee and every morning, you buy a cup from your favorite coffee shop. You’ve noticed that the time it takes you to get your coffee varies a bit every day, sometimes it’s 3 minutes, sometimes 5 minutes, but it’s always around 4 minutes on average. Now, let’s say you start timing how long it takes every day. On day one, it takes 4.5 minutes. On day two, it’s 3.8 minutes. You keep recording, and over time, as you gather more and more data, the average of all your recorded times starts getting closer and closer to that 4-minute mark.

This is what we call convergence—as you collect more observations (more days), the average time it takes to get your coffee converges to the true average (in this case, 4 minutes). Now suppose you only recorded the time for 5 days. The average might be a bit off—maybe 4.2 minutes or 3.9 minutes. But what if you recorded it every day for a whole year? After 365 days, you would expect the average time to be very close to 4 minutes. This is the Law of Large Numbers (LLN) in action. The LLN tells us that as the number of observations increases, the average of those observations will get closer to the true average.

There are two types of LLN:

- Weak Law of Large Numbers (WLLN): This version assures you that if you record for long enough, the probability that the average is far from 4 minutes becomes very small. For any fixed number of days, though, there is still a small chance that the average is off.

- Strong Law of Large Numbers (SLLN): This is an even stronger statement, saying that if you could keep recording forever (infinite days), the average would, with probability 1, converge to 4 minutes and stay arbitrarily close to it from some day onward.

The above explanation, however, is oversimplified.

The LLN is why we trust averages. Even if you see some variation in the short term, in the long run, you can expect the average to represent the true value accurately. The SLLN states that the sample average will almost surely converge to the expected value as the sample size approaches infinity. In practical terms, this means that the probability of the sample average not converging to the true mean is zero.

Let’s take another example. Say you’re flipping a fair coin. You know that in theory, you should get heads 50% of the time. But if you flip it only 10 times, you might get 7 heads and 3 tails, which is 70% heads. That seems far from 50%! However, the LLN tells us that if you keep flipping that coin many, many times (thousands or even millions of times), the percentage of heads will get closer and closer to 50%. The more data you collect, the more accurate your results become. The Weak Law says that as you increase your number of coin flips, the chance of your result being far from 50% gets smaller and smaller. The Strong Law makes an even bolder claim. It says that if you could flip the coin infinitely many times, the percentage of heads would, with probability 1, converge to 50%.
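The coin-flip version of the LLN is easy to simulate. The sketch below uses Python’s `random` module with a fixed seed (an arbitrary choice, made only so the run is repeatable) and prints the fraction of heads for increasing numbers of flips.

```python
import random


def proportion_of_heads(num_flips, seed=0):
    """Flip a simulated fair coin num_flips times and return the fraction of heads."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    heads = sum(rng.random() < 0.5 for _ in range(num_flips))
    return heads / num_flips


for n in (10, 1_000, 100_000):
    print(n, proportion_of_heads(n))
```

Small runs can land noticeably away from 0.5, while the large run should sit very close to it.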

I have provided some mathematical equations here but you can refer to this resource or this one for more rigorous understanding.

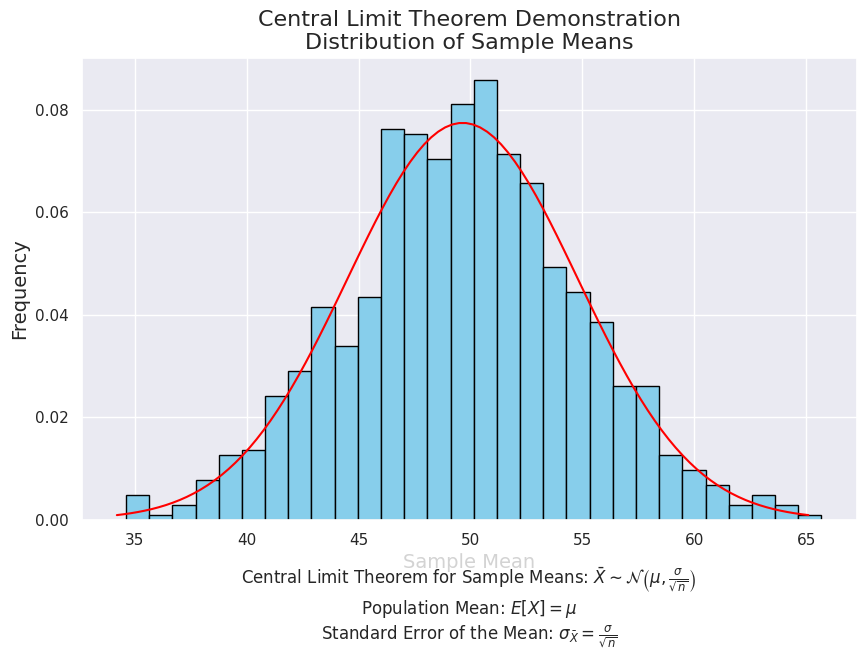

Now, let’s come back to the original example and say you’ve got the average time it takes to get your coffee (4 minutes), but you’re also curious about the distribution of all those times you recorded. Maybe most days it’s around 4 minutes, but sometimes it’s much quicker, and occasionally it takes much longer. Here’s where the Central Limit Theorem (CLT) comes in.

CLT tells us that if you were to take lots of samples (like averaging the times over different weeks), the distribution of those sample averages would form a bell-shaped curve, known as a normal distribution, regardless of how the original times are distributed. For example, you randomly pick 30 days out of the year, calculate the average time it took to get coffee on those days, and then repeat this process many times. The CLT tells us that the histogram of these averages would look like a bell curve centered around 4 minutes.

The CLT is why the normal distribution (the bell curve) is so common in statistics and machine learning. Even if the data itself isn’t normally distributed, the averages of samples taken from that data often are. This is why normal distribution assumptions are often reasonable in real-world applications.

When we repeatedly draw samples of size n from a population and calculate their means, these sample means form a new distribution called the sampling distribution of the mean. The mean of this sampling distribution is the same as the mean of the original distribution. Its variance is the variance of the original distribution divided by the sample size n. Its standard deviation, called the standard error of the mean (SEM), is the square root of that variance, σ / √n. This is a measure of how much the sample mean is expected to vary from the population mean.

To see this in practice, you just need a sufficient number of samples, each typically of size 30 or more.
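A quick simulation can illustrate the CLT and the sampling distribution of the mean. The sketch below draws samples of size 30 from an exponential distribution (clearly not bell-shaped; the rate 0.1 is an assumed value giving mean 10 and standard deviation 10) and summarizes the resulting sample means. The seed and the number of samples are arbitrary.

```python
import random
from math import sqrt
from statistics import mean, stdev

rng = random.Random(42)  # fixed seed for repeatability
lam = 0.1                # exponential population: mean 10, standard deviation 10
sample_size = 30
num_samples = 5_000

# Mean of each of the num_samples samples
sample_means = [
    sum(rng.expovariate(lam) for _ in range(sample_size)) / sample_size
    for _ in range(num_samples)
]

print(mean(sample_means))      # should be close to the population mean, 10
print(stdev(sample_means))     # should be close to the SEM below
print(10 / sqrt(sample_size))  # theoretical SEM: sigma / sqrt(n)
```

Plotting a histogram of `sample_means` would show the roughly bell-shaped curve, even though the individual waiting times are strongly right-skewed.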

Confidence Intervals And Hypothesis Testing:

Now that we’ve discussed the Central Limit Theorem (CLT), let’s discuss the concept of confidence intervals, which is closely related. The Central Limit Theorem tells us that the distribution of sample means will approximate a normal distribution as the sample size grows, regardless of the population’s distribution. This powerful result allows us to make inferences about population parameters, such as the mean, using sample data. This is called statistical inference which provides methods for drawing conclusions about a population from sample data.

A confidence interval is a range of values, derived from a sample, that is likely to contain the population parameter (like the population mean) with a certain level of confidence. In other words, it’s a way of saying, “We are X% confident that the true population mean lies within this interval.” For example, suppose we calculate a 95% confidence interval for the mean height of adult males in a certain region. If the interval is [170 cm, 180 cm], we are saying that we are 95% confident that the true mean height of all adult males in that region lies between 170 cm and 180 cm.

The confidence level is the long-run proportion of confidence intervals, constructed by the same procedure, that contain the true population parameter. Common confidence levels are 90%, 95%, and 99%. It’s crucial to understand that a confidence interval is not a probability statement about the population parameter itself. Instead, it reflects the reliability of the estimation process. If we were to repeat the sampling process many times, we would expect about 95% of the calculated 95% confidence intervals to contain the true population mean.

Let’s understand this using an example. Let’s say you’re trying to estimate the average height of adult women in a city. You randomly select 100 adult women from the city and measure their heights. You find the average height of these 100 women and calculate the standard deviation. Using the sample mean, standard deviation, sample size, and desired confidence level (e.g., 95%), you calculate the confidence interval. This gives you a range of values where you believe the true population mean height lies. If you repeated this process many times (i.e., took many different samples of 100 women), you would find that approximately 95% of the confidence intervals you construct would contain the true average height of all adult women in the city.

Confidence is about the interval, not the population parameter: We are confident that the process of constructing confidence intervals will capture the true population parameter 95% of the time. Each individual interval either contains the true mean or it doesn’t. We don’t know for sure whether a specific interval captures the true mean. Increasing the sample size generally leads to narrower confidence intervals, providing a more precise estimate of the population parameter.
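The “95% of intervals” interpretation can be checked by simulation. The sketch below repeatedly draws samples of 100 heights from an assumed normal population (mean 165 cm, standard deviation 7 cm, both invented), builds a z-based 95% interval from each sample using the known standard deviation, and counts how often the interval contains the true mean.

```python
import random
from math import sqrt


def coverage(num_repeats=2_000, n=100, mu=165.0, sigma=7.0, z=1.96, seed=1):
    """Fraction of z-based 95% intervals that contain the true mean mu."""
    rng = random.Random(seed)  # fixed seed for repeatability
    se = sigma / sqrt(n)       # standard error, with sigma assumed known
    hits = 0
    for _ in range(num_repeats):
        sample_mean = sum(rng.gauss(mu, sigma) for _ in range(n)) / n
        lower, upper = sample_mean - z * se, sample_mean + z * se
        hits += lower <= mu <= upper  # True counts as 1
    return hits / num_repeats


print(coverage())
```

The printed fraction should be near 0.95, while any single interval either contains the true mean or does not.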

In practice, we usually only have one sample, and from that single sample we estimate the population parameter. Working with multiple samples is often impractical in real-world scenarios due to time, cost, and resource constraints, which is why we should try our best to get a sample that is representative of the population. The confidence interval accounts for the fact that our estimate is based on just one sample, so it gives us a range where the true parameter is likely to be.

The standard error is a measure of how much the sample mean is likely to vary from the true population mean. It quantifies the sampling error. Standard Error (SE) = Population Standard Deviation (σ) / Square Root of Sample Size (n). A larger sample size reduces the standard error, meaning the sample mean is more likely to be closer to the population mean. A larger population standard deviation increases the standard error, indicating more variability in the data. If you’re measuring the heights of adults, the standard error of the mean height would be smaller for a sample of 1000 people than for a sample of 100 people.

Now we will use the concepts from the CLT. In practice, you usually take just one sample, and you calculate one sample mean. Even though you have just one sample, the CLT allows you to use the properties of the normal distribution to create a confidence interval around the sample mean.

The CLT states that as the sample size increases, the distribution of the sample mean (x̄) approaches a normal distribution, even if the original population distribution is not normal. For large sample sizes (typically n>30), the sampling distribution of the sample mean is approximately normal, regardless of the population distribution. The Z-score is a value that tells us how many standard deviations a particular data point (or, in this case, the sample mean) is from the mean of a standard normal distribution (which has a mean of 0 and a standard deviation of 1). The Z-score is used because it allows us to standardize our data, making it easier to calculate probabilities and confidence intervals. For example, a Z-score of 1.96 corresponds to a 95% confidence level, meaning that 95% of the values lie within 1.96 standard deviations from the mean in a standard normal distribution. Similarly, a Z-score of 2.58 corresponds to a 99% confidence level.
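The critical values quoted above come from the inverse CDF of the standard normal distribution. A minimal sketch using the standard library follows; the function name `z_critical` is an illustrative choice.

```python
from statistics import NormalDist


def z_critical(confidence):
    """Two-sided critical value: the central `confidence` mass lies within +/- z."""
    tail = (1 - confidence) / 2  # probability left in each tail
    return NormalDist().inv_cdf(1 - tail)


for level in (0.90, 0.95, 0.99):
    print(level, round(z_critical(level), 3))
```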

By multiplying the standard error by the critical value (in our case Z-score) which helps define the width of the confidence interval, we determine the margin of error. This margin is then added and subtracted from the sample mean to create the confidence interval.

The margin of error determines the width of a confidence interval. It’s the amount added and subtracted from the sample mean to create the interval’s upper and lower bounds. Margin of Error (ME) = Critical Value * Standard Error. A larger margin of error means a wider confidence interval, indicating more uncertainty. A smaller margin of error means a narrower confidence interval, indicating less uncertainty. If you want a 95% confidence interval and have a standard error of 2, and the critical value (z-score) is 1.96, the margin of error would be 1.96 * 2 = 3.92.

Now, let’s see how we can calculate a confidence interval:

1. Decide how confident you want to be that your interval contains the true population parameter (e.g., the population mean). Common choices are 90%, 95%, and 99%. The confidence level determines the critical value you’ll use.

2. Calculate the mean of your sample. This is your best estimate of the population mean.

3. Calculate the standard error, which gives you a sense of how much the sample mean might vary from the population mean.

4. Look up the critical value for your chosen confidence level in the table for the distribution you are using (for example, a z-table).

5. Calculate the margin of error by multiplying the critical value by the standard error.

6. Add and subtract the margin of error from the sample mean to get the confidence interval.

Example 1: In this example, we assume that we know the population standard deviation, although in most real-world scenarios we don’t. When the population standard deviation is known, we use the normal (z) distribution.

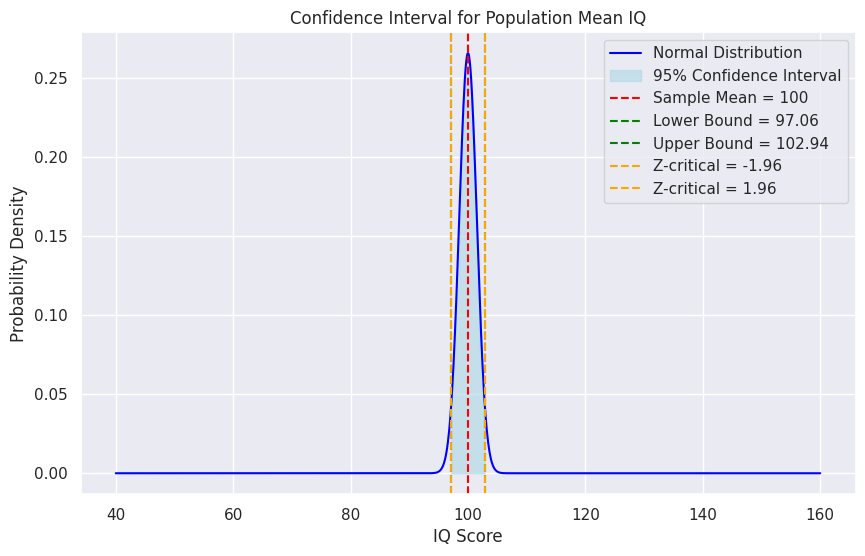

A researcher wants to estimate the average IQ of adults in a certain population.

A random sample of 100 adults is selected, and their IQ scores are measured.

The sample mean IQ is found to be 100, and the population standard deviation is known to be 15.

Construct a 95% confidence interval for the population mean IQ.

Given:

Sample mean (x̄) = 100

Population standard deviation (σ) = 15

Sample size (n) = 100

Confidence level = 95%

Steps:

1. Find the z-score: For a 95% confidence level, the z-score is 1.96.

2. Calculate the standard error: SE = σ / √n = 15 / √100 = 1.5

3. Calculate the margin of error (E): E = z * SE = 1.96 * 1.5 = 2.94

4. Construct the confidence interval:

Lower limit = x̄ - E = 100 - 2.94 = 97.06

Upper limit = x̄ + E = 100 + 2.94 = 102.94

Interpretation:

We are 95% confident that the true population mean IQ lies between 97.06 and 102.94.
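The steps of Example 1 can be reproduced in a few lines of Python. This is a minimal sketch using only the standard library; the variable names are illustrative, and the critical value 1.96 is hard-coded exactly as in the example rather than looked up.

```python
import math

x_bar, sigma, n = 100, 15, 100   # sample mean, population std dev, sample size
z = 1.96                         # critical value for a 95% confidence level

standard_error = sigma / math.sqrt(n)
margin = z * standard_error
lower, upper = x_bar - margin, x_bar + margin

print(f"SE = {standard_error}, E = {margin:.2f}")
print(f"95% CI: ({lower:.2f}, {upper:.2f})")
```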

Example 2: We can also find the sample size needed to estimate a population mean

Let's say we want to estimate the average height of adult males in a city with a 95% confidence interval and a margin of error of 2 inches.

Assuming a population standard deviation of 3 inches, we can use the following formula: n = (Z * σ / E)^2, where:

n is the sample size

Z is the z-score for the desired confidence level (1.96 for 95%)

σ is the population standard deviation

E is the margin of error

n = (1.96 * 3 / 2)^2 = (2.94)^2 = 8.6436

We would round up to 9, meaning we need a sample of at least 9 males to achieve the desired level of precision.
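The sample-size formula n = (Z * σ / E)^2 is easy to evaluate in code. The sketch below uses only the standard library; `required_sample_size` is a hypothetical helper name, and rounding up with `math.ceil` reflects the fact that a fractional person cannot be sampled.

```python
import math


def required_sample_size(z, sigma, margin_of_error):
    """Return the exact value of (z * sigma / E)^2 and the rounded-up sample size."""
    n_exact = (z * sigma / margin_of_error) ** 2
    return n_exact, math.ceil(n_exact)


n_exact, n_required = required_sample_size(z=1.96, sigma=3, margin_of_error=2)
print(f"Exact n: {n_exact:.4f}, required sample size: {n_required}")
```

Because the margin of error sits in the denominator and is squared, halving it to 1 inch would roughly quadruple the required sample size.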

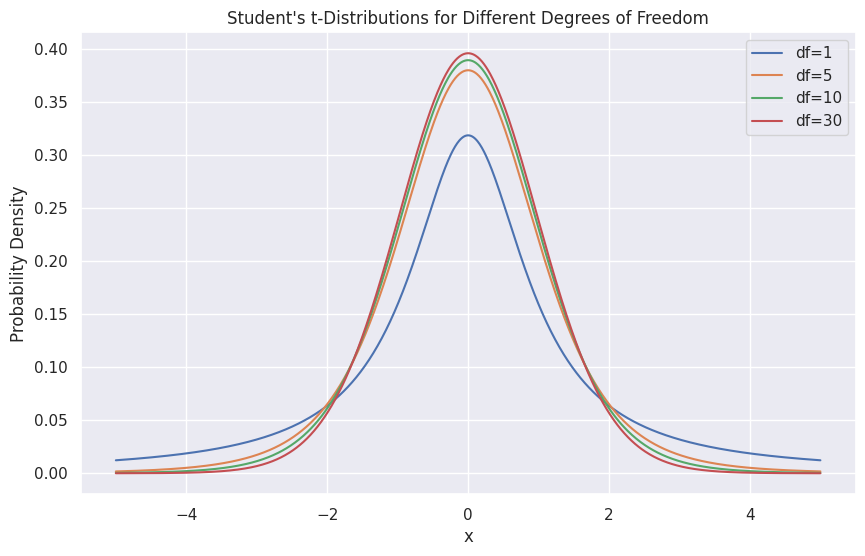

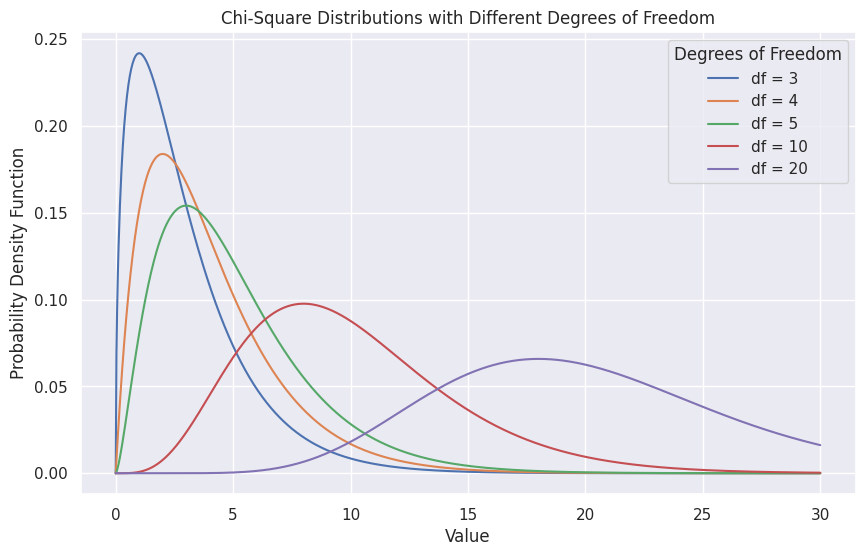

In real life you may also need to consider other factors in addition to the formula. When the population standard deviation is not known, we use the t-distribution instead of the normal distribution, and this matters most when the sample size is small. Even with a bigger sample size, if the population standard deviation is not known, the t-distribution is the appropriate choice. The t-distribution is similar to the standard normal distribution but has fatter tails. These heavier tails account for the extra uncertainty that comes from estimating the standard deviation from the sample, so the resulting intervals are wider for small samples.

The shape of the t-distribution is determined by its degrees of freedom, which is equal to the sample size minus one (n-1). As the sample size increases, the t-distribution approaches the normal distribution. This is because with larger sample sizes, the estimate of the population standard deviation becomes more reliable. For very large sample sizes, the difference between the t-distribution and normal distribution becomes negligible.
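To see the t-distribution approaching the normal distribution, we can compare two-sided 95% critical values for growing sample sizes. This is a small sketch assuming SciPy is available; the sample sizes are arbitrary illustrative choices.

```python
from scipy import stats

z_crit = stats.norm.ppf(0.975)          # two-sided 95% critical value (normal)
sample_sizes = (5, 10, 30, 100, 1000)   # arbitrary sizes chosen for illustration

# Degrees of freedom are n - 1 for each sample size
t_values = [stats.t.ppf(0.975, n - 1) for n in sample_sizes]

for n, t_crit in zip(sample_sizes, t_values):
    print(f"n = {n:>4}, df = {n - 1:>3}: t = {t_crit:.3f}  (z = {z_crit:.3f})")
```

The t critical value starts well above the z critical value for tiny samples and shrinks toward it as the degrees of freedom grow.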

When calculating confidence intervals, if the population standard deviation (σ) is known, we use the normal distribution and standard normal critical values (z). However, if σ is unknown, we estimate it using the sample standard deviation (s) and use the t-distribution instead, which matters most when the sample size is very small. In this case, the confidence interval is calculated by first computing the standard error (SE) as SE = s / √n. We then find the t-score (t-critical value) using the degrees of freedom and the confidence level. In the example above, you simply replace σ with s and the z-score with the t-critical value. You can also use confidence intervals to estimate population proportions. The concept is the same, so feel free to read more about it.

Here is a function to calculate the confidence interval for both cases:

```python
import numpy as np
from scipy import stats


def confidence_interval(data, confidence_level, distribution="t-distribution"):
    mean = np.mean(data)
    # standard error of the mean (uses the sample standard deviation)
    sem = stats.sem(data)
    confidence_level = confidence_level / 100  # convert percent to decimal format
    degrees_of_freedom = len(data) - 1
    # two-sided interval: leave (1 - confidence_level) / 2 in each tail
    cumulative_probability = (1 + confidence_level) / 2

    if distribution == "t-distribution":
        critical_value = stats.t.ppf(cumulative_probability, degrees_of_freedom)
    elif distribution == "normal":
        critical_value = stats.norm.ppf(cumulative_probability)
    else:
        raise ValueError("Unsupported distribution. Choose 't-distribution' or 'normal'.")

    margin_of_error = critical_value * sem
    lower = mean - margin_of_error
    upper = mean + margin_of_error
    return float(round(lower, 2)), float(round(upper, 2))


if __name__ == "__main__":
    data = np.array([10, 12, 14, 16, 18, 20, 22])
    ci_normal = confidence_interval(data, 95, distribution="normal")
    ci_t_distribution = confidence_interval(data, 95, distribution="t-distribution")
    # ci_other = confidence_interval(data, 99, distribution="other")  # will raise ValueError

    # Print results
    print("Confidence Interval using Normal Distribution:", ci_normal)
    print("Confidence Interval using T-Distribution:", ci_t_distribution)
    # print("Result for Unsupported Distribution:", ci_other)
```

In machine learning, you can use confidence intervals to understand the variability of evaluation metrics across different subsets of data. Confidence intervals can also quantify the uncertainty of predictions made by a model. This is particularly useful in regression tasks where you want to provide a range of likely values for a prediction. In cross-validation, confidence intervals help you understand how much model performance metrics vary across the different folds of the data.
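As a rough illustration of the cross-validation use case, the sketch below builds a t-based 95% confidence interval around the mean accuracy of five folds. The accuracy values are hypothetical numbers invented for this example, not results from a real model, and the snippet assumes NumPy and SciPy are installed.

```python
import numpy as np
from scipy import stats

# Hypothetical accuracies from 5-fold cross-validation (illustrative values only)
fold_accuracy = np.array([0.81, 0.79, 0.84, 0.80, 0.82])

mean = fold_accuracy.mean()
sem = stats.sem(fold_accuracy)      # sample standard deviation / sqrt(n)
df = len(fold_accuracy) - 1         # degrees of freedom

# t-based interval, because the standard deviation is estimated from 5 values
lower, upper = stats.t.interval(0.95, df, loc=mean, scale=sem)
print(f"Mean accuracy: {mean:.3f}, 95% CI: ({lower:.3f}, {upper:.3f})")
```

Keep in mind that cross-validation folds share training data, so the scores are not fully independent and an interval like this is best read as an approximate guide rather than an exact guarantee.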

Building on the idea of confidence intervals, which give us a range of values where we think a population parameter might fall, hypothesis testing comes into play when we want to make decisions or draw conclusions based on data. While a confidence interval tells us how uncertain we are about an estimate, hypothesis testing helps us decide whether the evidence in our data is strong enough to support a specific claim about the population.

Let’s say you’re thinking about buying a house and are curious about whether the property value in the area is really increasing, as some people say.

- Confidence Interval: You gather data on recent sales in the neighborhood and find that the average increase in property values over the past year is between $10,000 and $30,000. This confidence interval gives you an idea of how much home prices might have risen, with some uncertainty.

- Hypothesis Testing: Now, you want to be sure that this increase isn’t just due to random fluctuations in the market. Hypothesis testing helps you figure out if the observed increase is significant or if it might just be a temporary blip. You might test the hypothesis that there has been no real increase in property values and see if your data strongly suggests otherwise. If the test supports the idea that property values are genuinely rising, you might feel more confident in your decision to buy.

But how do we actually carry out a hypothesis test? To do that, we need to understand a few things. We start with two contradicting hypotheses. We then gather data to check whether it offers enough evidence to reject one of them. Let’s understand these two hypotheses.

First is the null hypothesis (H0). The null hypothesis is like the “status quo” or the default assumption that nothing has changed or that there’s no effect. Suppose you’re trying a new coffee recipe and want to know if it really tastes better than your usual one. The null hypothesis is the assumption that the new recipe tastes exactly the same as your old one. In other words, nothing special is happening; everything is as it has always been.

The second is the alternative hypothesis (Ha). It is the idea that challenges the status quo. It’s what you’re trying to prove or support with evidence. In the coffee example, the alternative hypothesis would be that the new recipe actually does taste better. It’s the claim that something is different or has changed for the better.

When you perform a hypothesis test, you start by assuming the null hypothesis is true (the new coffee is no better than the old one). You then gather evidence (like taste-test results) to see if there’s enough proof to reject the null hypothesis in favor of the alternative hypothesis (that the new coffee is better). If your evidence is strong enough, you reject the null hypothesis and accept the alternative hypothesis, meaning you believe the new coffee is indeed tastier. If the evidence isn’t strong enough, you fail to reject the null hypothesis. This does not prove the two recipes taste the same; it only means the data did not show convincingly that the new coffee is better.

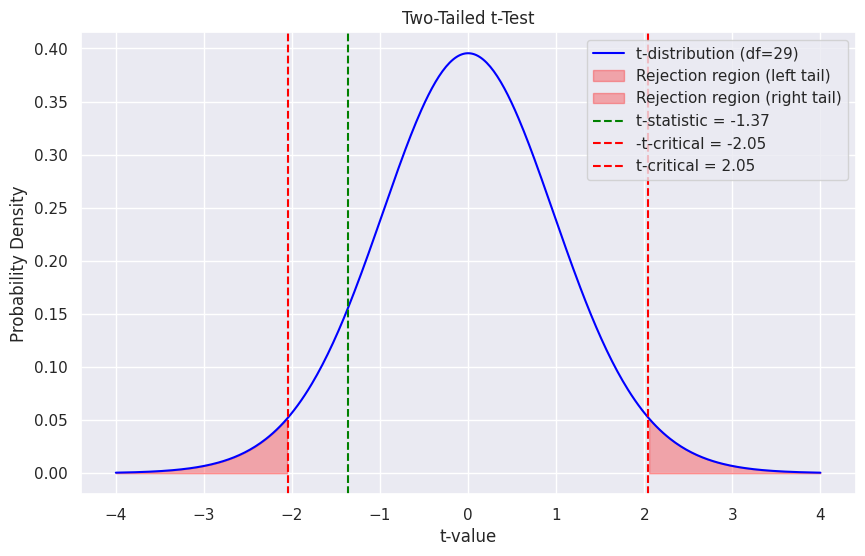

Once you have weighed the evidence in your data, you decide whether or not to reject the null hypothesis. In hypothesis testing, we use different distributions depending on the specifics of our data and what we know about the population. When testing a single population mean, we use either a normal distribution or a Student’s t-distribution.

If we know the population standard deviation and the sample size is large enough, we use the normal distribution (often called a z-test). This is because, with a large sample size, the sample mean tends to be normally distributed, even if the population distribution itself isn’t perfectly normal. However, if the population standard deviation is unknown and we’re working with a smaller sample size, we use the Student’s t-distribution (called t-test).

For large samples (typically, n > 30 is considered sufficient), using the z-test is generally appropriate, even if the population standard deviation is unknown. The z-test and the t-test will yield very similar results in this case because the t-distribution approaches the normal distribution as the sample size increases. When dealing with large sample sizes, hypothesis testing becomes robust to deviations from normality in the population distribution. This means you can confidently use the normal distribution for inference as long as your sample size is large enough.

One of the key concepts in hypothesis testing is the level of significance, which is closely tied to the idea of confidence intervals. When we perform hypothesis testing, we’re often trying to determine whether the sample data provides enough evidence to reject a null hypothesis in favor of an alternative hypothesis. The level of significance, denoted by α, is a crucial component of this process. It represents the threshold at which we decide whether the evidence is strong enough to reject the null hypothesis. In simpler terms, the level of significance is the probability of making a Type I error, which occurs when we reject the null hypothesis even though it is actually true. For example, if we set α = 0.05, we are accepting a 5% chance of incorrectly rejecting the null hypothesis.
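The link between α and the Type I error rate can be illustrated with a simulation: if the null hypothesis is true and we test many independent samples at α = 0.05, roughly 5% of them should be rejected by chance alone. This is a sketch under stated assumptions: the population (normal with mean 15 and standard deviation 2), the sample size, the number of experiments, and the seed are all arbitrary illustrative choices.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)  # arbitrary seed for reproducibility
alpha = 0.05
n_experiments, n = 5_000, 30

# Every sample is drawn from a population where H0 (mean = 15) is actually true
samples = rng.normal(loc=15, scale=2, size=(n_experiments, n))

# One two-sided, one-sample t-test per row
p_values = stats.ttest_1samp(samples, popmean=15, axis=1).pvalue

# Fraction of true null hypotheses that were (wrongly) rejected
type_i_rate = np.mean(p_values < alpha)
print(f"Share of true nulls rejected: {type_i_rate:.3f}")
```

The printed share should land close to `alpha`, and lowering `alpha` to 0.01 should shrink it accordingly.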

The level of significance is closely related to the confidence level used in confidence intervals. For instance, a 95% confidence interval corresponds to a 5% level of significance. This means that if we were to construct a 95% confidence interval for a population parameter and then conduct a two-tailed hypothesis test at a 5% significance level, the hypothesis test would reject the null hypothesis if the value specified by the null hypothesis falls outside of the confidence interval.
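This correspondence can be checked directly. The sketch below reuses the numbers from Example 1 (x̄ = 100, σ = 15, n = 100) and compares a 95% confidence interval with a two-tailed z-test at α = 0.05; the null values 101 and 104 and the helper name `compare` are hypothetical and were picked only to show one value inside the interval and one outside it.

```python
import math
from scipy import stats

x_bar, sigma, n = 100, 15, 100   # values from Example 1
alpha = 0.05

se = sigma / math.sqrt(n)
z_crit = stats.norm.ppf(1 - alpha / 2)
ci = (x_bar - z_crit * se, x_bar + z_crit * se)


def compare(mu_0):
    """Return (inside_ci, p_value) for the null hypothesis mu = mu_0."""
    z = (x_bar - mu_0) / se
    p_value = 2 * stats.norm.sf(abs(z))   # two-tailed p-value
    inside = ci[0] <= mu_0 <= ci[1]
    return inside, p_value


for mu_0 in (101, 104):  # hypothetical null values chosen for illustration
    inside, p = compare(mu_0)
    print(f"mu_0 = {mu_0}: inside CI = {inside}, p = {p:.4f}, reject H0 = {p < alpha}")
```

A null value inside the interval goes with a p-value above α, and a null value outside it goes with a p-value below α.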

Another important concept is the p-value. The p-value is a probability that measures the strength of the evidence against the null hypothesis. It represents the probability of obtaining a test statistic at least as extreme as the one observed, assuming that the null hypothesis is true. In simpler terms, the p-value tells us how likely it would be to see data at least as extreme as our sample if the null hypothesis were correct.

If the p-value is less than the significance level (p < α), we reject the null hypothesis. This means the evidence is strong enough to conclude that something other than chance is at play. If the p-value is greater than the significance level (p > α), we fail to reject the null hypothesis. This means the evidence is not strong enough to conclude that something other than chance is at play.

Example 3: Hypothesis testing

Imagine you are a data analyst at a company that runs a website.

The company claims that the average session duration on their website is 15 minutes.

However, you suspect that the average session duration might actually be different from 15 minutes.

To test this, you collect a random sample of session durations.

You start by setting up your null and alternative hypotheses:

Null Hypothesis (H<sub>0</sub>): The average session duration is 15 minutes.

Mathematically, this is expressed as H<sub>0</sub>: μ = 15 minutes.

Alternative Hypothesis (H<sub>a</sub>): The average session duration is not 15 minutes.

This is expressed as H<sub>a</sub>: μ ≠ 15 minutes.

Choose a significance level:

You decide to use a significance level of α = 0.05.

This means you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true (Type I error).

You collect data from a random sample of 30 sessions on the website.

After recording the session durations, you find that the sample has the following characteristics:

Sample size (n): 30

Sample mean: 14.5 minutes

Sample standard deviation (s): 2 minutes

Since the population standard deviation is unknown and the sample size is relatively small, you use a t-test to compare the sample mean to the hypothesized population mean.

You can find the value of the test statistic using the formula discussed earlier, which here gives t = (14.5 - 15) / (2 / √30) ≈ -1.37.

To find the p-value, look up this t-value in a t-distribution table, using n - 1 = 29 degrees of freedom.

The p-value comes out to about 0.18 (twice the one-tailed value, because the test is two-tailed; see below), which is greater than α.

You compare the p-value to your significance level.

Since the p-value is greater than 0.05, you fail to reject the null hypothesis.

Failing to reject the null hypothesis means that there is not enough evidence to support the claim that the average session duration is different from 15 minutes.

In other words, based on this sample, you do not have sufficient evidence to conclude that the true average session duration is different from 15 minutes.
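Instead of a t-table, the test in Example 3 can be computed from the summary statistics with SciPy. This is a minimal sketch with illustrative variable names; it works from the reported mean and standard deviation because the raw session durations are not given (with raw data, `scipy.stats.ttest_1samp` would be the more direct route).

```python
import math
from scipy import stats

n, x_bar, s = 30, 14.5, 2.0      # sample size, sample mean, sample std dev
mu_0, alpha = 15.0, 0.05         # hypothesized mean and significance level

standard_error = s / math.sqrt(n)
t_stat = (x_bar - mu_0) / standard_error
df = n - 1

# Two-tailed test: double the area in one tail beyond |t|
p_value = 2 * stats.t.sf(abs(t_stat), df)
reject = p_value < alpha

print(f"t = {t_stat:.3f}, df = {df}, p-value = {p_value:.3f}")
print("Reject H0" if reject else "Fail to reject H0")
```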

While performing hypothesis testing, you will encounter a few situations:

- You are interested in whether the parameter differs from a certain value in either direction (greater or less). In this case, the rejection region is located at both ends of the distribution, and the test is called a two-tailed test. This means you’re looking at the possibility of an extreme outcome on either end of the distribution, which requires you to split your significance level (e.g., 5%) between both tails (2.5% in each tail). For example, if you’re testing whether a new drug has a different effect from the existing drug, you would use a two-tailed test because you want to know if the new drug is either more or less effective than the current one. In this case, the null hypothesis might state that the effectiveness of both drugs is the same, while the alternative hypothesis would state that the effectiveness is different, without specifying a direction.